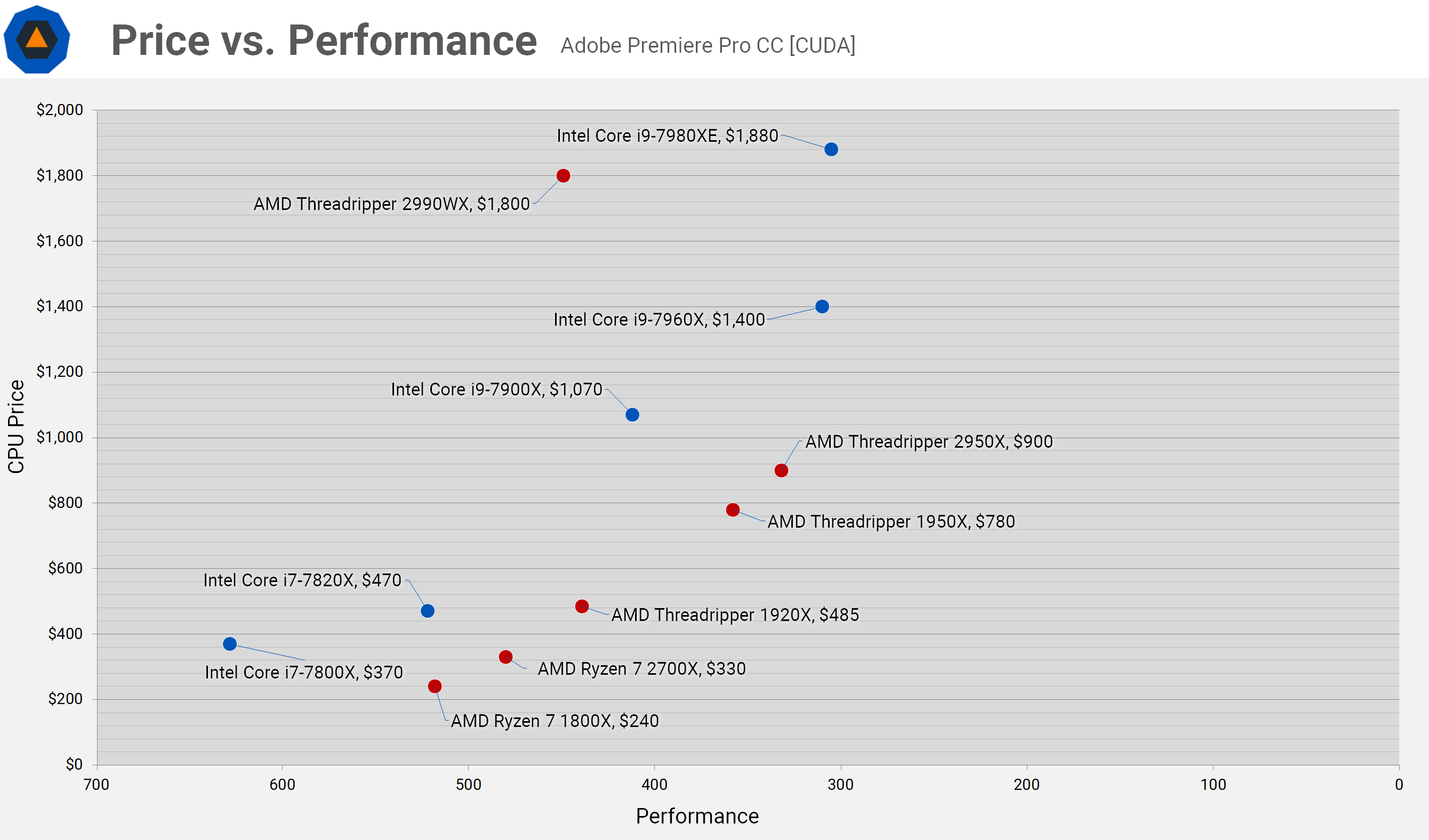

What just happened? After the 16-core Threadripper 2950X launched with consistently great performance, AMD fans couldn't have been more excited for the 32-core 2990WX... only to be disappointed when it finally arrived offering far worse value and versatility. As many suspected all along, a new report suggests, pending full confirmation, that a bug in the Windows scheduler is halving the 2990WX's performance, which means it could be fixable in software.

In TechSpot's benchmarking session, we found that the 2990WX was 35% slower than the 2950X in Adobe Premiere Pro, 30% slower in Handbrake and 25% slower in 7-Zip file compression. That's not to say it didn't have its place, however, as it was 40-50% faster in the Corona and Blender rendering tests. In the end, we concluded, "we feel many like us will be scrapping their 2990WX plans, and most will be moving to the 2950X instead."

The tech community almost unanimously blamed the memory controller configuration AMD chose. The 2950X has two 8-core dies and two memory controllers, giving the Infinity Fabric link between the dies roughly 50 Gbps of throughput with 3200MHz memory. The 2990WX has four 8-core dies but the same two memory controllers, which essentially halves the memory bandwidth available to each die. This seemed like a logical culprit, since the performance deficit only showed up in memory-sensitive applications.
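The "halving" argument is simple division. The sketch below assumes, as the paragraph does, that the same two memory controllers' worth of bandwidth is split evenly among the dies; the aggregate is normalized to 1.0 rather than using real figures, and `per_die_share` is a hypothetical helper written for illustration.

```python
def per_die_share(total_bandwidth: float, dies: int) -> float:
    """Each die's share if the bandwidth is split evenly (a simplifying assumption)."""
    if dies <= 0:
        raise ValueError("dies must be positive")
    return total_bandwidth / dies


# Normalized bandwidth of the two memory controllers (assumption: real numbers
# depend on memory speed and channel count).
TOTAL = 1.0

share_2950x = per_die_share(TOTAL, dies=2)   # two dies share two controllers
share_2990wx = per_die_share(TOTAL, dies=4)  # four dies share the same two controllers

ratio = share_2990wx / share_2950x
print(f"2950X per-die share:  {share_2950x:.2f}")   # 0.50
print(f"2990WX per-die share: {share_2990wx:.2f}")  # 0.25
print(f"2990WX / 2950X:       {ratio:.2f}")         # 0.50
```

This even-split model is exactly the intuition the community relied on; the rest of the article explains why it turned out not to be the main cause.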

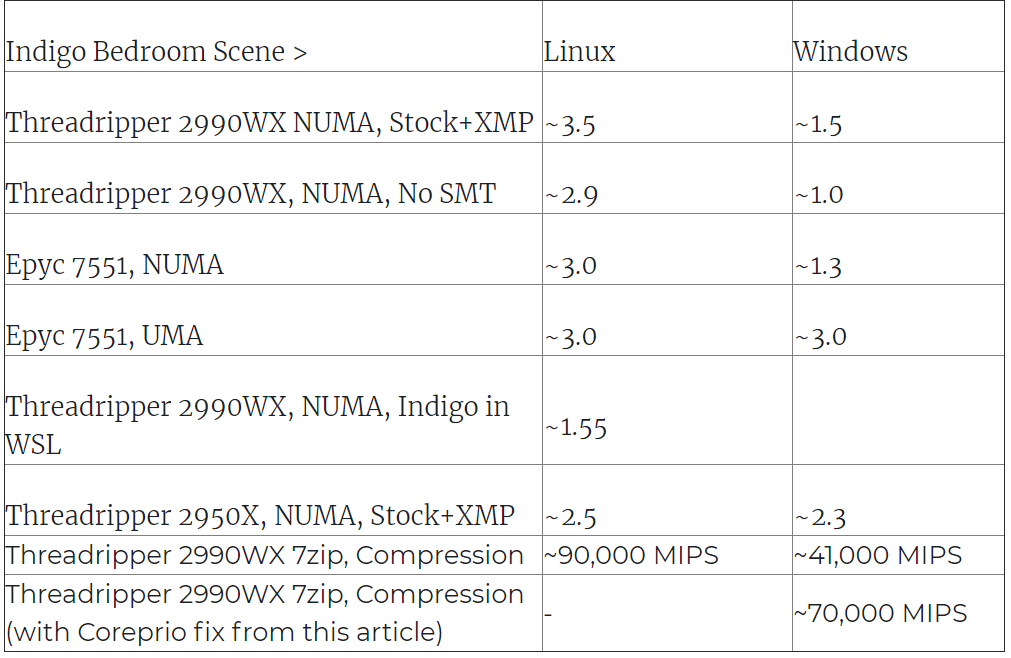

Wendell at Level1Techs questioned this explanation, however, because the 2990WX performed very differently in Linux. Looking for a better explanation, he ran a wide battery of tests using the Indigo bedroom render benchmark on two processors: the 2990WX and its EPYC counterpart, the 32-core 7551. He discovered that the vast majority of the performance woes were caused by a Windows bug rather than by memory limitations.

The main difference between the Threadripper and the EPYC is that the EPYC has four memory controllers, which should alleviate the supposed memory bottleneck. With one memory controller per die, the EPYC can also switch between UMA (Uniform Memory Access) and NUMA (Non-Uniform Memory Access) modes.

UMA gives each die more memory bandwidth but adds latency, because memory requests may not go to the nearest controller. NUMA reduces latency by pairing each die with its own controller, but at the cost of bandwidth. Note that the 2990WX can only operate in NUMA mode, because it has two dies per controller.
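The trade-off can be put into numbers with a toy model. This is a minimal sketch, not a measurement: the 90 ns local and 140 ns remote latencies are made-up illustrative values, bandwidth is normalized to one controller, and "UMA" is modeled as memory interleaved evenly across all controllers.

```python
from dataclasses import dataclass


@dataclass
class MemoryModel:
    nodes: int            # dies, each paired with one memory controller
    local_ns: float       # latency to the die's own controller (hypothetical)
    remote_ns: float      # latency to another die's controller (hypothetical)
    controller_bw: float  # bandwidth of one controller, normalized units

    def uma_latency(self) -> float:
        # Interleaved memory: a request lands on any controller with equal
        # probability, so only 1 in `nodes` requests is local.
        return (self.local_ns + (self.nodes - 1) * self.remote_ns) / self.nodes

    def numa_latency(self) -> float:
        # NUMA-aware placement: the die's data sits behind its own controller.
        return self.local_ns

    def uma_peak_bw(self) -> float:
        # One busy die striping across every controller while the others idle.
        return self.nodes * self.controller_bw

    def numa_peak_bw(self) -> float:
        # One busy die limited to its own controller.
        return self.controller_bw


epyc = MemoryModel(nodes=4, local_ns=90.0, remote_ns=140.0, controller_bw=1.0)
print(f"UMA:  {epyc.uma_latency():.1f} ns avg, {epyc.uma_peak_bw():.1f}x bandwidth")
print(f"NUMA: {epyc.numa_latency():.1f} ns avg, {epyc.numa_peak_bw():.1f}x bandwidth")
```

With these assumed numbers UMA averages 127.5 ns against 90 ns for NUMA, but a single die can draw on four controllers instead of one, which is the latency-for-bandwidth swap described above.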

Indigo bedroom benchmark scores (higher is better):

| | Threadripper 2990WX | EPYC 7551 NUMA | EPYC 7551 UMA |
|---|---|---|---|
| Windows 10 | 1.5 | 1.3 | 3.0 |
| Linux | 3.5 | 3.0 | 3.0 |
| Linux on Windows 10 | 1.6 | N/A | N/A |

The fact that the 2990WX performs well in Linux but poorly in Windows suggests that this isn't a hardware issue, and the 7551 behaving the same way in NUMA mode reinforces that idea. Equally poor results in Windows and in Linux on Windows (using the Windows Subsystem for Linux to run the Linux version of Indigo on Windows) show that the gap isn't caused by differences between the two versions of the application.

Similarly poor performance from the 2990WX and from the 7551 in NUMA mode on Windows shows that the Threadripper's shortage of memory controllers isn't the issue either, because the 7551 has twice as many. (The 7551 scores slightly lower, probably because of its lower clock speeds.)

The 7551's strong performance on Windows in UMA mode could suggest that the application is simply optimized for UMA, but its identical performance in UMA and NUMA modes on Linux discredits that theory.
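The elimination argument boils down to a few ratios over the Indigo scores in the table. This sketch only recomputes those published numbers; the dictionary layout and the `linux_over_windows` helper are illustrative.

```python
# Indigo bedroom scores from the table (higher is better).
scores = {
    "Threadripper 2990WX": {"Windows 10": 1.5, "Linux": 3.5, "Linux on Windows 10": 1.6},
    "EPYC 7551 NUMA": {"Windows 10": 1.3, "Linux": 3.0},
    "EPYC 7551 UMA": {"Windows 10": 3.0, "Linux": 3.0},
}


def linux_over_windows(cpu: str) -> float:
    """How many times faster the Linux run is than the native Windows run."""
    return scores[cpu]["Linux"] / scores[cpu]["Windows 10"]


ratios = {cpu: linux_over_windows(cpu) for cpu in scores}
for cpu, r in ratios.items():
    print(f"{cpu:20s} Linux/Windows = {r:.2f}")

# The Linux build of Indigo running under WSL, compared with the native Windows build.
tr = scores["Threadripper 2990WX"]
wsl_vs_native = tr["Linux on Windows 10"] / tr["Windows 10"]
print(f"2990WX WSL/native Windows = {wsl_vs_native:.2f}")
```

Both NUMA configurations lose more than half their Linux performance on Windows (ratios of about 2.33 and 2.31), UMA loses nothing (1.00), and the WSL run lands within a few percent of the native Windows build (1.07). The common factor is Windows in NUMA mode, not the chip or the application build.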

The only remaining suspect was Windows itself. To check, Wendell and Jeremy at Bitsum analyzed the tags Windows uses internally to manage threads and discovered that every thread spawned by the Indigo benchmark had an "ideal CPU" tag that would direct it to a particular core.

Normally this would be good, as cores closer to the memory controller can achieve better performance, but Windows wasn't sending the threads there. Instead, it was moving them around essentially at random. Windows was so busy pointlessly reorganizing threads that it spent 50% of the processor's power just shuffling them around.

This is why the 7551 more than doubles its performance when switched from NUMA to UMA mode in Windows: in UMA mode, Windows assumes that all the cores are equal because each is paired with a memory controller. In NUMA mode the bug kicks in and Windows goes back to shuffling threads.
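The behavior described in the last few paragraphs can be illustrated with a toy scheduler. This is NOT how the Windows scheduler actually works; it is a hypothetical model in which each thread starts on its "ideal" core, UMA mode leaves threads in place because every core looks equivalent, and buggy NUMA mode re-places every thread on an arbitrary core each tick. It counts moves that cross from one die to another, which is where cache locality and memory latency suffer.

```python
import random

CORES_PER_NODE = 8   # one 8-core die per NUMA node
NODES = 4            # four dies, as on the 2990WX and EPYC 7551
CORES = CORES_PER_NODE * NODES


def node_of(core: int) -> int:
    """Map a core index to the die (NUMA node) it belongs to."""
    return core // CORES_PER_NODE


def simulate(threads: int, ticks: int, numa_mode: bool, seed: int = 0) -> int:
    """Count cross-die thread moves in a toy scheduler (not Windows' real logic)."""
    rng = random.Random(seed)
    # Hypothetical: thread t starts on its ideal core t, wrapping around.
    current = [t % CORES for t in range(threads)]
    cross_die_moves = 0
    for _ in range(ticks):
        for t in range(threads):
            if not numa_mode:
                # UMA: every core looks equivalent, so the thread stays put.
                continue
            # NUMA with the reported bug: the ideal tag is ignored and the
            # thread is re-placed on an arbitrary core every tick.
            new_core = rng.randrange(CORES)
            if node_of(new_core) != node_of(current[t]):
                cross_die_moves += 1
            current[t] = new_core
    return cross_die_moves


print("UMA mode, cross-die moves: ", simulate(threads=32, ticks=100, numa_mode=False))
print("NUMA mode, cross-die moves:", simulate(threads=32, ticks=100, numa_mode=True))
```

With four dies, a uniformly random re-placement crosses a die boundary about three times out of four, so the buggy mode racks up thousands of expensive moves while UMA mode records none.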

Jeremy at Bitsum has implemented a fix in the CorePrio utility, which you can download now to see the performance improvement for yourself. Tentatively titled the 'NUMA Dissociator', the fix essentially creates a phony UMA mode in which Windows no longer tries to send threads to an 'ideal' core. In Indigo, the 2990WX's score doubles to 3.0, and in 7-Zip compression performance jumps an impressive 71%.

Notably, though, the 2990WX is still 22% slower in Windows than in Linux in 7-Zip, even with the fix. A properly implemented solution from Microsoft could narrow the gap even further. Considering that Microsoft hasn't even bothered to look for the problem, however, I wouldn't be too optimistic that one is coming.
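The reported gains can be cross-checked with a little arithmetic. The Indigo values come straight from the article. For 7-Zip only percentages are given, so this sketch assumes "71% faster" means fixed = 1.71 × unfixed and "22% slower than Linux" means fixed = 0.78 × Linux; both readings are interpretations, not published figures.

```python
# Indigo scores for the 2990WX, as reported.
indigo_windows = 1.5
indigo_fixed = 3.0      # with CorePrio's NUMA Dissociator
indigo_linux = 3.5

indigo_speedup = indigo_fixed / indigo_windows   # the "doubling"
indigo_gap = 1 - indigo_fixed / indigo_linux     # remaining deficit vs Linux

# 7-Zip: assumed readings of the article's percentages (see lead-in).
zip_fixed_vs_unfixed = 1.71
zip_fixed_vs_linux = 0.78
zip_unfixed_vs_linux = zip_fixed_vs_linux / zip_fixed_vs_unfixed

print(f"Indigo speedup from fix:        {indigo_speedup:.2f}x")
print(f"Indigo gap to Linux after fix:  {indigo_gap:.0%}")
print(f"7-Zip unfixed Windows vs Linux: {zip_unfixed_vs_linux:.0%}")
```

Under these assumptions the unpatched 2990WX ran 7-Zip at roughly 46% of its Linux speed, close to the "halving" in the headline, while in Indigo the fix leaves a gap of about 14% to Linux.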