What just happened? Nvidia has revealed the first PCI-Express variant of the Ampere A100 GPU that it unveiled last month, but gamers shouldn't get excited: it's designed to accelerate development in the fields of AI, data science, and supercomputing.

Nvidia finally showed off its new 7nm Ampere architecture last month in the form of the A100 GPU. Now, the company has announced a number of A100-powered systems from leading server manufacturers, including Asus, Dell, Cisco, Lenovo, and more.

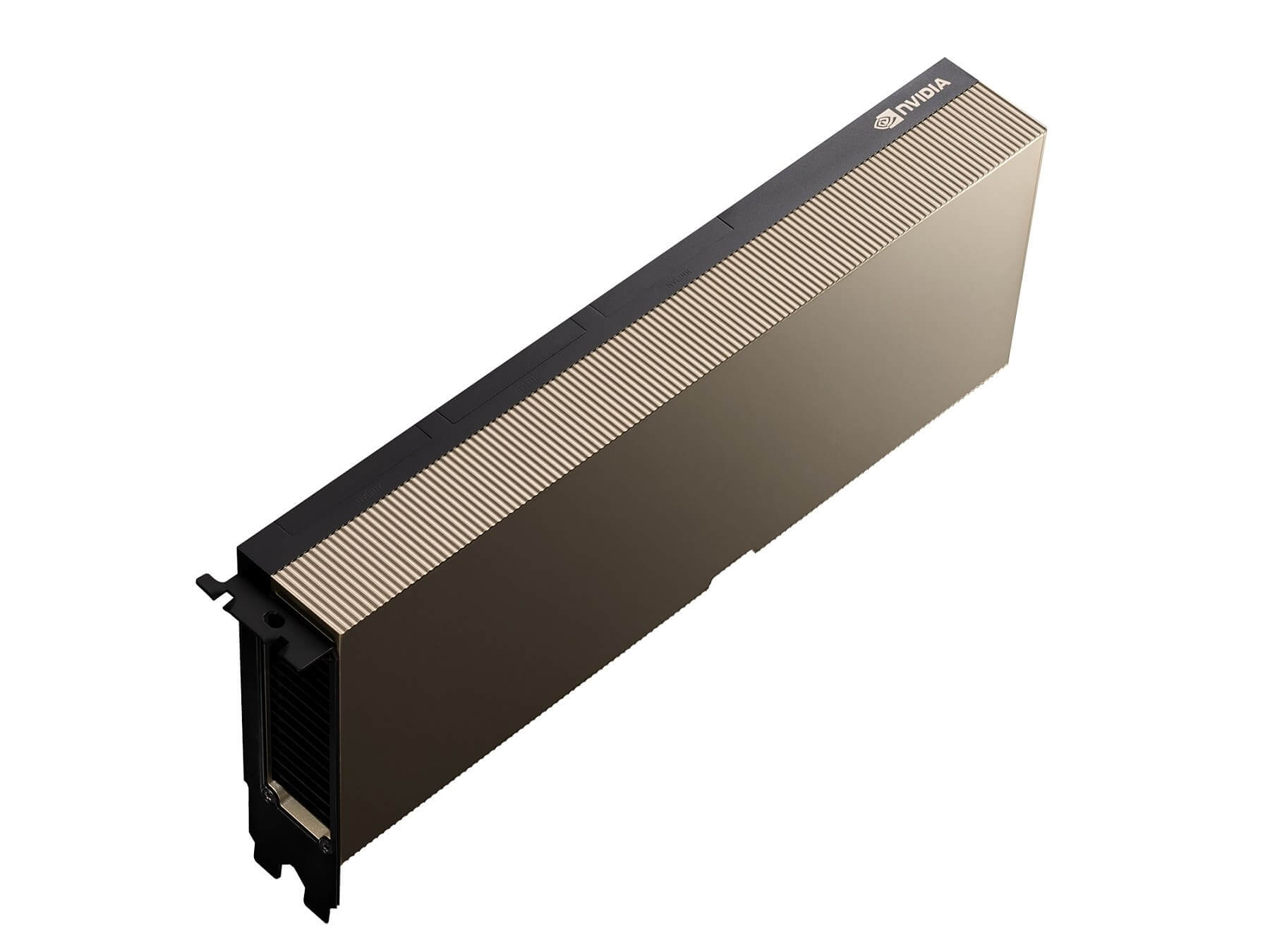

Nvidia has also revealed a PCI-Express variant of the A100. It has the same 6912 CUDA cores, 54 billion transistors, six HBM2 memory stacks, and 40GB of memory as the SXM4 version, but the new connectivity allows for easy installation into existing systems.

As noted by ComputerBase (via Tom's Hardware), there are some drawbacks to switching to PCIe 4.0. The TDP of this variant drops from 400W to 250W, which reportedly means a 10 to 50 percent performance penalty depending on the workload, with the GPU better suited to short bursts than to sustained loads.

There are expected to be 30 A100-powered server systems available this summer, and over 20 more by the end of the year.

"Adoption of NVIDIA A100 GPUs into leading server manufacturers' offerings is outpacing anything we've previously seen," said Ian Buck, VP and GM of Accelerated Computing at Nvidia. "The sheer breadth of NVIDIA A100 servers coming from our partners ensures that customers can choose the very best options to accelerate their data centers for high utilization and low total cost of ownership."

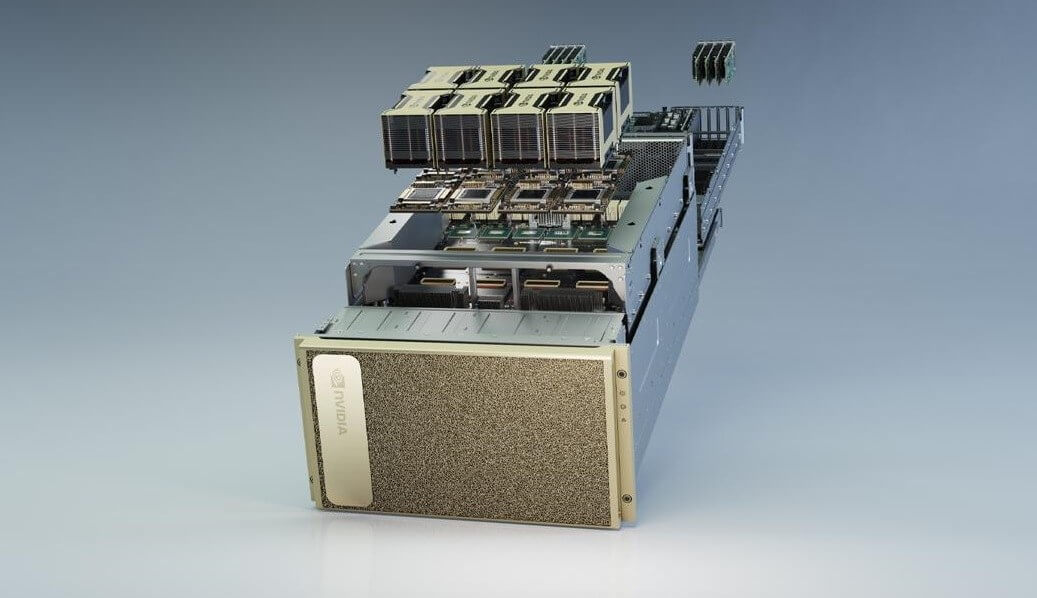

Thanks to Ampere's improvements, the A100 can boost performance by up to 20x over its Volta-based predecessor. It features multi-instance GPU tech, which allows a single A100 to be partitioned into as many as seven separate GPUs to handle different computing tasks. There's also 3rd-gen NVLink, which enables several GPUs to be joined into one giant GPU, and new structural sparsity capabilities that can be used to double performance in some AI workloads.

In a separate announcement, Nvidia said it has just smashed the record for the big data analytics benchmark, known as TPCx-BB. Using sixteen of its DGX A100 systems, which add up to a total of 128 A100 GPUs, the company ran the benchmark in 14.5 minutes. The previous record was 4.7 hours.
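
For a rough sense of scale, the benchmark figures above can be turned into a speedup with a few lines of Python. This is only a back-of-the-envelope sketch using the numbers quoted in this article; the variable names are ours, and the result is not an official Nvidia figure.

```python
# Figures quoted in the article (not independently measured).
previous_record_hours = 4.7   # previous TPCx-BB record
new_record_minutes = 14.5     # Nvidia's reported run time
dgx_systems = 16              # DGX A100 systems used
total_gpus = 128              # A100 GPUs across those systems

# Convert the old record to minutes so both times share a unit.
previous_record_minutes = previous_record_hours * 60

# Speedup is simply old time divided by new time.
speedup = previous_record_minutes / new_record_minutes

# Each DGX A100 contributes an equal share of the GPUs.
gpus_per_system = total_gpus // dgx_systems

print(f"Previous record: {previous_record_minutes:.0f} minutes")
print(f"Speedup: about {speedup:.1f}x")
print(f"GPUs per DGX A100 system: {gpus_per_system}")
```

On those numbers, the new run works out to a little over 19 times faster than the previous record, with eight A100 GPUs in each DGX system.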