Why it matters: Part of Google's presentation for its new Pixel 6 and Pixel 6 Pro phones focused on the company's efforts to address bias in camera technology. Real Tone is an effort to make the Pixel 6 camera photograph people with darker skin tones more accurately.

A Google blog post accompanying the Pixel 6 presentation and the announcement page for Real Tone both acknowledge that Google's photo technology has had problems accurately representing people of color. The blog post explains that a lack of testing on subjects with a variety of skin tones has caused the technology to make mistakes when photographing them, "like over-brightening or unnaturally desaturating skin."

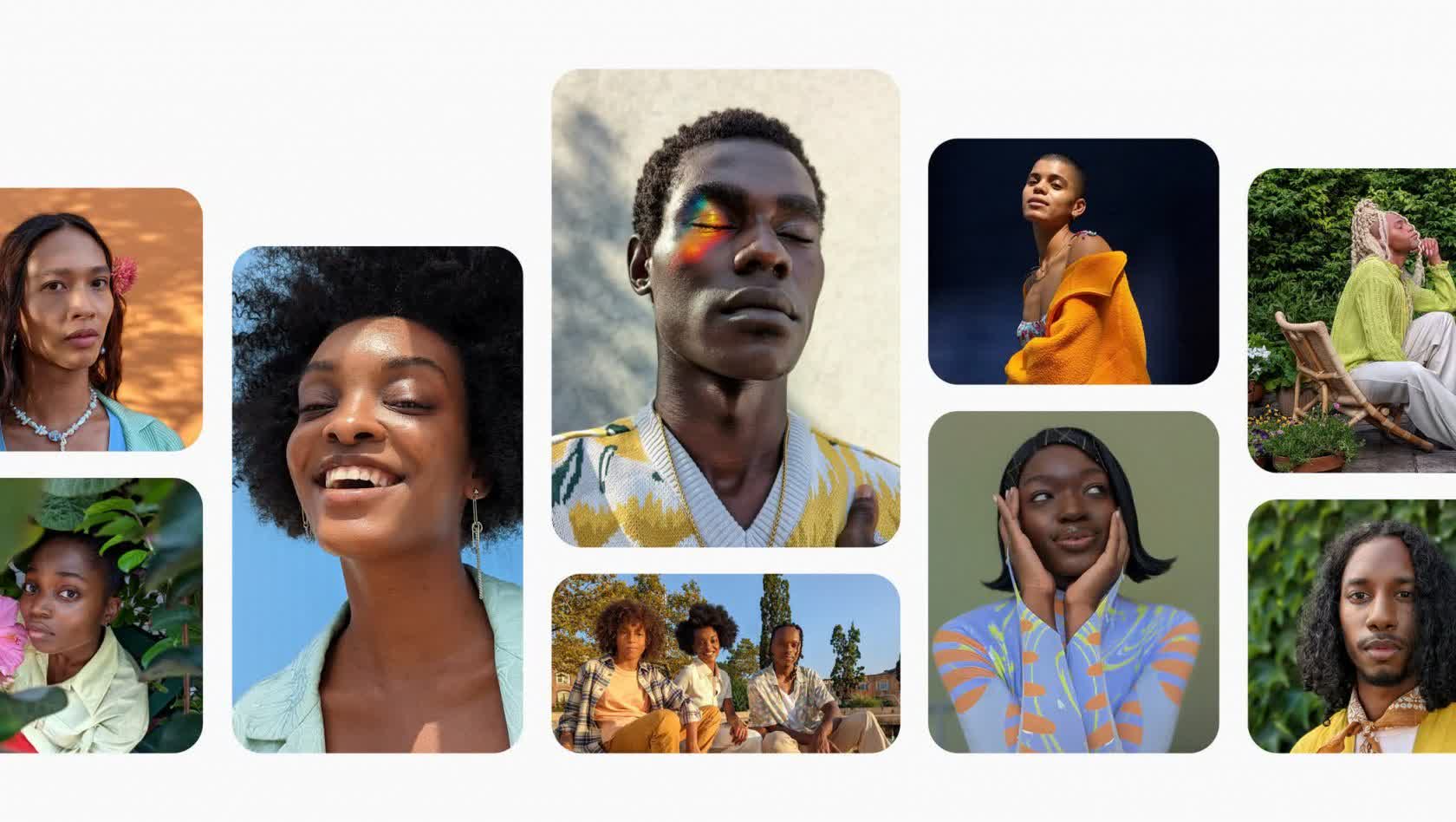

To start addressing this issue for Real Tone, Google worked with photographers known for depicting people of color. They include Kira Kelly, Deun Ivory, Adrienne Raquel, Kristian Mercado, Zuly Garcia, Shayan Asgharnia, Natacha Ikoli, and others. The datasets Google uses to train its camera models will start to include more portraits of people of color, and Real Tone will include several features to improve how the Pixel 6 camera detects skin.

Training the camera models on more diverse datasets should improve how the Pixel 6 camera detects faces in a variety of lighting situations. Auto-white balance on the Pixel 6 should produce more nuanced depictions of color, and auto-exposure should keep pictures from being made unnecessarily light or dark. An algorithm should reduce the washed-out effect stray light can have on subjects with darker skin tones. The new Tensor processor's ability to manipulate blur should address the blurriness that can sometimes occur when photographing people with darker skin tones. Google says the experts it consulted also helped it improve the auto enhance feature in Google Photos. The updated auto enhance should work across more skin tones and will come to both Android and iOS users.

Skin-tone bias in imaging technology has been well documented. It has had implications not only for photography but also for image sharing and facial recognition. In August, Twitter gave an award to a researcher whose study revealed that the service's image crop algorithm favored lighter, thinner faces. In June of last year, IBM decided to stop developing "general purpose" facial recognition technology due to worries regarding policing and racial injustice.