In brief: Meta has designed and is building what it believes will be the fastest AI supercomputer in the world. The AI Research SuperCluster (RSC) is already among the fastest AI supercomputers running, and is expected to be unrivaled when it is fully completed in mid-2022.

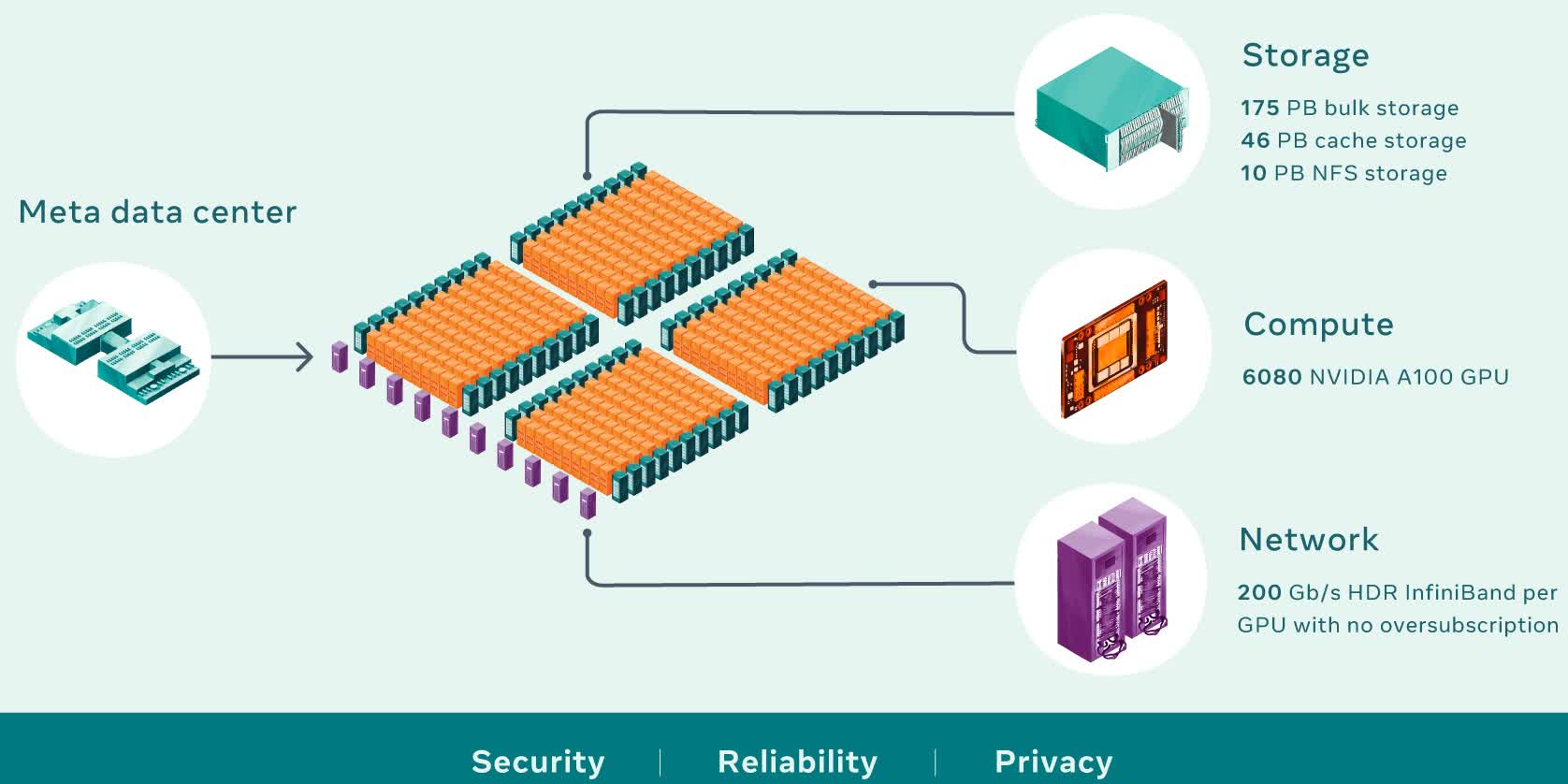

As it stands today, the system comprises 760 Nvidia DGX A100 systems (6,080 GPUs) linked by an Nvidia Quantum 1600 Gb/s InfiniBand two-level Clos fabric with no oversubscription.

Furthermore, the system has "175 petabytes of Pure Storage FlashArray, 46 petabytes of cache storage in Penguin Computing Altus systems, and 10 petabytes of Pure Storage FlashBlade." Early tests show that, compared with Meta's legacy production and research infrastructure, the system runs computer vision workflows up to 20 times faster, runs the Nvidia Collective Communication Library (NCCL) nine times faster, and trains large-scale NLP models three times faster.

That's only the beginning. Once complete, Meta's supercomputer will connect 16,000 GPUs. The company has also designed a caching and storage system that can serve 16 TB/s of training data, and it plans to scale that system up to one exabyte.
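For a rough sense of scale, the figures quoted above can be checked with some back-of-the-envelope arithmetic. The Python sketch below is illustrative only: the variable and function names are made up for this example, and adding the three storage tiers together is an assumption made for comparison, not a total that Meta reported.

```python
# Back-of-the-envelope check of the RSC figures quoted in this article.
# All names here are illustrative, not taken from Meta or Nvidia.

GPUS_PER_DGX_A100 = 8  # each DGX A100 system houses eight A100 GPUs


def gpu_count(dgx_systems: int) -> int:
    """Total GPUs for a given number of DGX A100 systems."""
    return dgx_systems * GPUS_PER_DGX_A100


current_gpus = gpu_count(760)          # systems installed today
planned_gpus = 16_000                  # target once RSC is complete
growth = planned_gpus / current_gpus   # how much larger the finished system is

# Storage tiers in petabytes, as listed in the quoted passage.
# Summing them is an assumption for illustration only.
storage_pb = {"FlashArray": 175, "Altus cache": 46, "FlashBlade": 10}
total_storage_pb = sum(storage_pb.values())

print(current_gpus)        # 6080
print(round(growth, 2))    # 2.63
print(total_storage_pb)    # 231
```

In other words, the 6,080 GPUs follow directly from 760 eight-GPU systems, and the finished cluster would have roughly 2.6 times as many GPUs as it does today.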

Meta said the work done with RSC will help it build technologies for the next major computing platform, the metaverse. It is here that Meta believes AI-driven applications and products will become even more important.

Indeed, if Meta's vision of the metaverse plays out as the company hopes, it will no doubt require a colossal amount of processing power. A supercomputer of this scale could go a long way toward bringing the concept to reality.