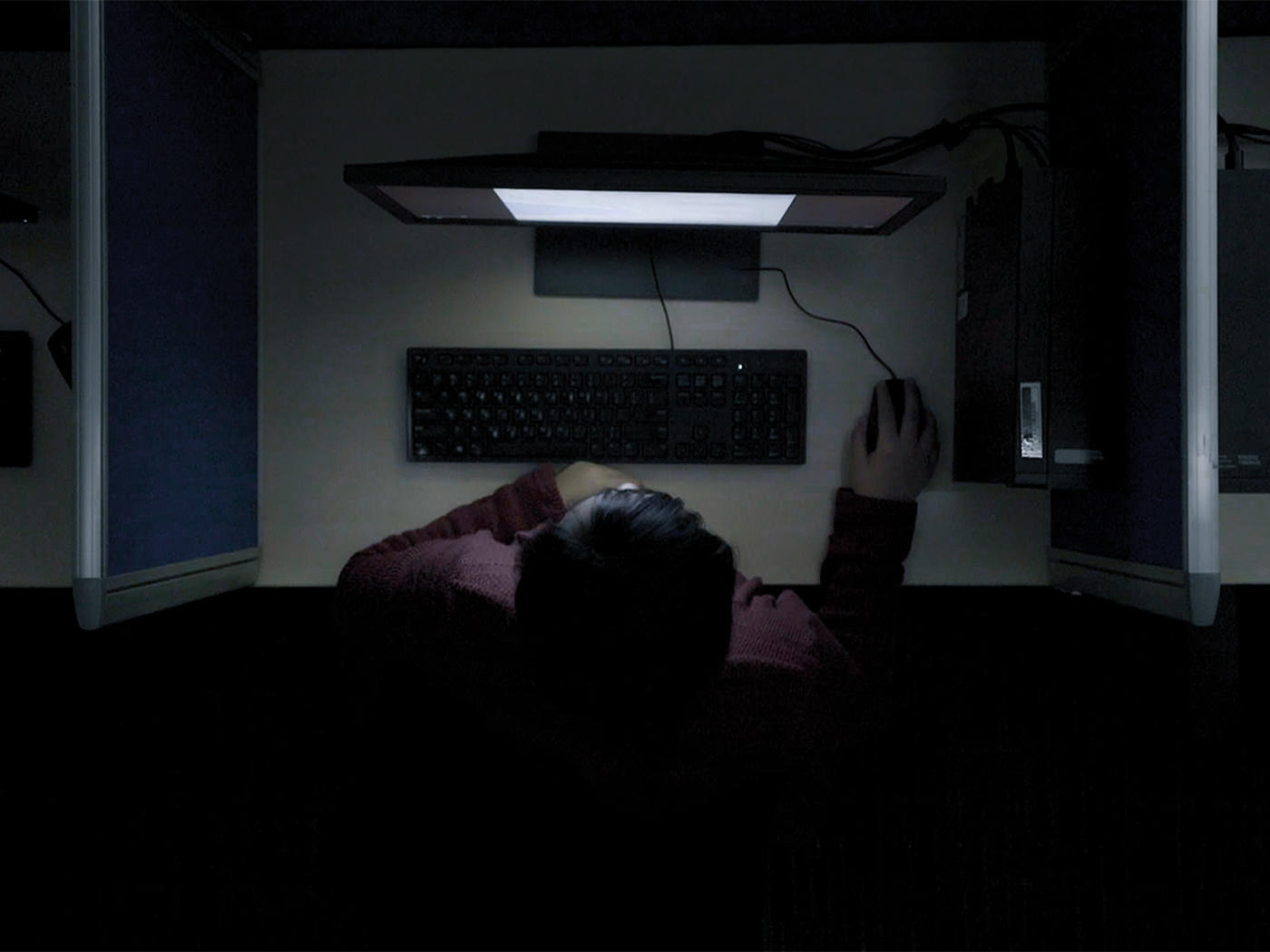

In brief: Not for the first time, former content moderators are suing TikTok over claims the company didn't do enough to support them as they watched extreme and graphic videos that included child sexual abuse, rape, torture, bestiality, beheadings, suicide, and murder.

Ashley Velez and Reece Young filed a class-action lawsuit against TikTok and parent company ByteDance, writes NPR. They worked through third-party contractors Telus International and New York-based Atrium.

The suit claims that TikTok and ByteDance violated California labor laws by failing to provide Velez and Young with adequate mental health support in a job that involved viewing "many acts of extreme and graphic violence." They also had to sit through hate speech and conspiracy theories that lawyers say had a negative impact on their mental well-being.

"We would see death and graphic, graphic pornography. I would see nude underage children every day," Velez said. "I would see people get shot in the face, and another video of a kid getting beaten made me cry for two hours straight."

The plaintiffs say they were allowed just two 15-minute breaks in their 12-hour workday and were given no more than 25 seconds per video to decide, with more than 80% accuracy, whether the content broke TikTok's rules. To meet quotas, moderators often watched more than one video at once, according to the suit, which accuses TikTok of imposing high "productivity standards" on moderators.

Both plaintiffs say they had to pay for counseling out of their own pockets to deal with the psychological impact of the job. They also had to sign non-disclosure agreements that prevented them from discussing the details of their work.

The suit claims that TikTok and ByteDance made no effort to provide "appropriate ameliorative measures" to help workers deal with the extreme content they were exposed to.

In December, another TikTok moderator launched a similar class-action lawsuit against the company and ByteDance, but the case was dropped last month after the plaintiff was fired, writes NPR.

In 2018, a content moderator for Facebook contractor Pro Unlimited sued the social network after the "constant and unmitigated exposure to highly toxic and extremely disturbing images at the workplace" resulted in PTSD. Facebook settled the case for $52 million. A YouTube moderator also sued the Google-owned firm in 2020 after developing symptoms of PTSD and depression from reviewing thousands of disturbing videos.