A hot potato: YouTube's algorithm for recommended content has come under fire again, this time over claims that it is recommending hundreds of videos about guns and gun violence to young kids interested in video games. Some of the clips included scenes of school shootings and instructions on how to use and modify weapons.

A study by the Tech Transparency Project (TTP), a nonprofit watchdog, uncovered the concerning recommendations by creating four test accounts – two identified as nine-year-old boys and two identified as 14-year-old boys.

The researchers controlling the accounts watched only playlists composed entirely of video game content. The nine-year-old accounts watched videos of titles such as Roblox, Lego Star Wars, and Five Nights at Freddy's, while the 14-year-old accounts watched mostly Grand Theft Auto, Halo, and Red Dead Redemption.

The researchers then logged the videos recommended to the accounts on the YouTube home page and Up Next panel during a 30-day period last November. "The study found that YouTube pushed content on shootings and weapons to all of the gamer accounts, but at a much higher volume to the users who clicked on the YouTube-recommended videos," wrote the TTP.

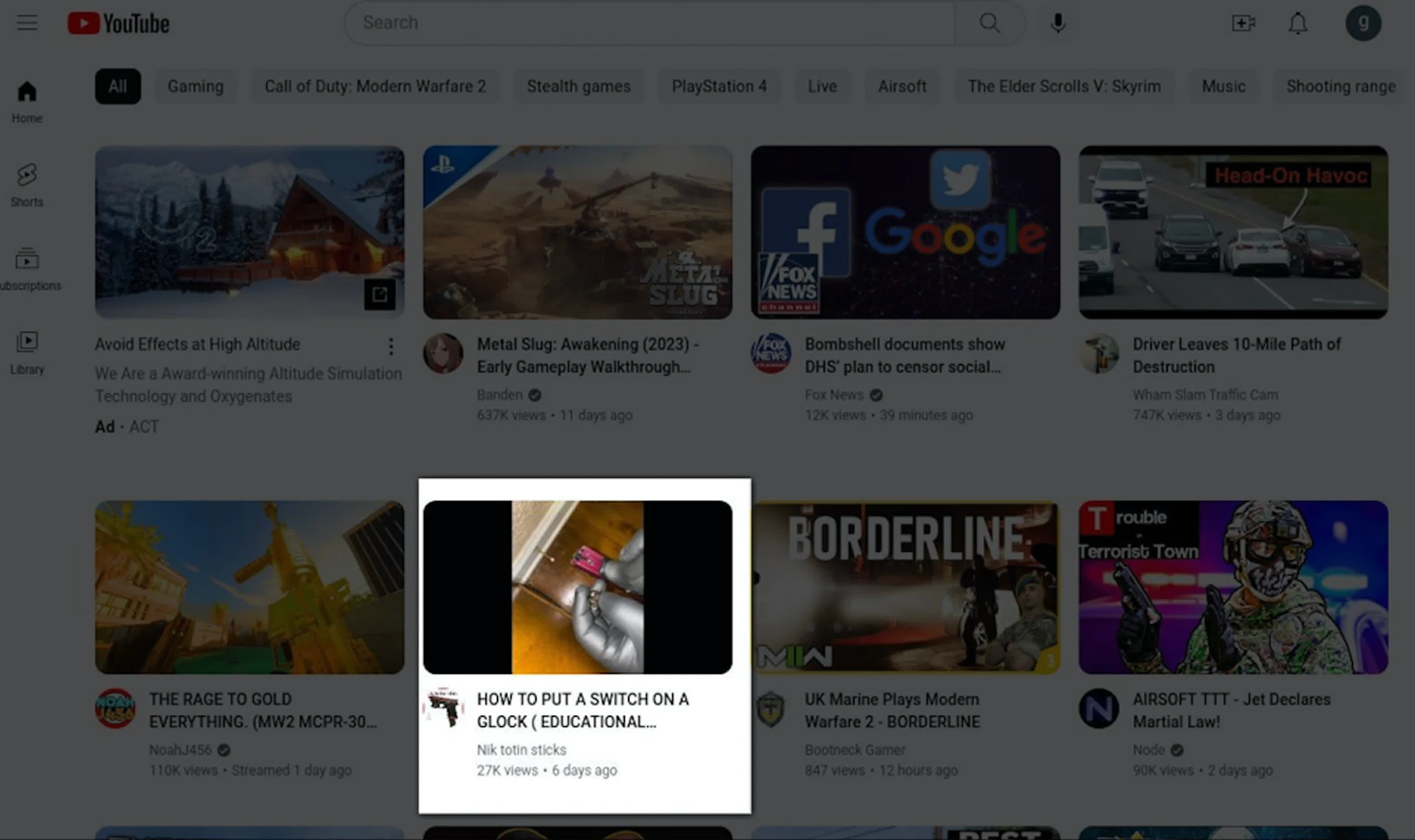

The recommended videos included footage of mass shooting events, demos of how much damage a gun can inflict on a body, and how-to guides for converting handguns into fully automatic weapons. Many of them violate YouTube's own content policies.

During the month-long testing period, YouTube pushed 382 real firearms videos to the nine-year-olds' accounts and 1,325 real firearms videos to the 14-year-olds' accounts.

YouTube has previously talked about delivering responsible recommendations and not steering viewers to extreme content, though the TTP disputes those claims with its findings. The watchdog notes that the way YouTube leads young gamers to videos of shootings and weapons could undo the work done to disprove links between video games and real-world gun violence.

Google has been trying to improve YouTube's recommended video algorithm for years, but it still offers up borderline, inappropriate, and sometimes offensive content. That is especially problematic given that YouTube is the most popular site among teens; one in five say they are on it almost constantly.

In a statement to Engadget, a YouTube spokesperson said YouTube Kids and the main site's in-app supervision tools were creating a "safer experience for tweens and teens" on its platform.

"We welcome research on our recommendations, and we're exploring more ways to bring in academic researchers to study our systems," the spokesperson added. "But in reviewing this report's methodology, it's difficult for us to draw strong conclusions. For example, the study doesn't provide context of how many overall videos were recommended to the test accounts, and also doesn't give insight into how the test accounts were set up, including whether YouTube's Supervised Experiences tools were applied."