Following up on our last mega benchmark involving the GeForce RTX 4070 Ti, today we're comparing that RTX 40 series GPU against the GeForce RTX 3080 10GB, a previous-generation GPU that is now two and a half years old. Of course, we'll be doing so in 50 games, with some titles tested using multiple configurations, including ray tracing and upscaling in some cases.

You might be wondering why this comparison is relevant, considering the RTX 4070 Ti and RTX 3080 are "meant to be" in two different GPU classes. But the thing is, that's just a name. Companies like Nvidia and AMD commonly use their established product families to upsell gamers, and that's exactly what's going on here.

When the GeForce RTX 3080 launched at $700, right before the crypto boom, getting one for that price was near impossible. Later on, Nvidia decided to take advantage of the market situation by re-releasing GA102 and GA104 chips with minor spec bumps, asking $1,200 for the RTX 3080 Ti and a more reasonable $600 for the RTX 3070 Ti.

Still, many gamers did purchase a 10GB GeForce RTX 3080, and many are probably considering an upgrade to an RTX 40 series product. The problem, of course, is pricing: it's gone up, by a lot! The GeForce RTX 4070 Ti starts at $800 (MSRP), with most models going for $850 or more at retail. Worse still, if you want the more desirable RTX 4080, that's supposed to cost $1,200, but in reality it's closer to $1,300. Ouch.

Another possible situation is that you didn't buy any GPUs during the crypto boom – smart! – and now you are ready to buy a new graphics card. The GeForce RTX 4070 Ti is pretty expensive at $800+ and while the RTX 3080 is no longer selling new, it may be attractive to buy second hand if you can find it for around $500-$550. Depending on what you own right now, this may still be considerably faster and a nice upgrade.

So, compared to the RTX 3080 10GB, does the GeForce RTX 4070 Ti have much to offer? Comparing some specs, the RTX 4070 Ti is 14% more expensive than the RTX 3080 was at launch ($800 vs. $700). The die itself is 53% smaller, though it manages to pack 27% more transistors thanks to the upgrade to the TSMC N4 process, a significant step up from Samsung's 8LPP.

The GeForce RTX 4070 Ti packs 8 times more L2 cache, going from a mere 6 MB to 48 MB. The core configuration has fewer CUDA cores, but IPC has been improved. The RTX 4070 Ti includes an extra 2GB of VRAM, taking the total to 12GB, but memory bandwidth has been significantly reduced due to a more limited 192-bit wide bus. That's a massive downgrade from the 320-bit wide bus on the RTX 3080, which when paired with 19 Gbps memory resulted in a bandwidth of 760 GB/s.

The RTX 4070 Ti uses faster 21 Gbps GDDR6X memory, but the narrower bus sees bandwidth cut to just 504 GB/s, a big 34% reduction. In memory sensitive scenarios, the RTX 4070 Ti will likely suffer, and that's something we're going to be looking at in our benchmarks. Ultimately, we want to see how the 3080 and 4070 Ti compare across a wide range of games, so let's go do that.
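For anyone who wants to check the bandwidth figures, here is a minimal sketch of the arithmetic. It assumes the usual peak-bandwidth formula (bus width in bits, multiplied by the per-pin data rate in Gbps, divided by 8 bits per byte); the function name is our own, purely for illustration.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s.

    bus width (bits) x per-pin data rate (Gbps) / 8 bits per byte
    """
    return bus_width_bits * data_rate_gbps / 8


# Figures quoted in the text: 320-bit @ 19 Gbps vs. 192-bit @ 21 Gbps.
rtx_3080 = memory_bandwidth_gbs(320, 19)
rtx_4070_ti = memory_bandwidth_gbs(192, 21)

# Reduction relative to the older card, as a percentage.
reduction_pct = (rtx_3080 - rtx_4070_ti) / rtx_3080 * 100

print(f"RTX 3080:    {rtx_3080:.0f} GB/s")
print(f"RTX 4070 Ti: {rtx_4070_ti:.0f} GB/s")
print(f"Reduction:   {reduction_pct:.1f}%")
```

This only describes theoretical peak bandwidth. It says nothing about how much of the deficit the larger L2 cache hides in practice, which is what the benchmarks are for.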

For testing, our system was powered by the Ryzen 9 7950X3D, but with the second CCD disabled to ensure max performance – essentially we're turning the CPU into the yet to be released 7800X3D. The Gigabyte X670E Aorus Master motherboard was used with 32GB of DDR5-6000 CL30 memory and the display drivers. As usual, we'll go over some data highlights for about a dozen games before jumping into the big breakdown graphs. The resolutions of interest are 1440p and 4K.

Benchmarks

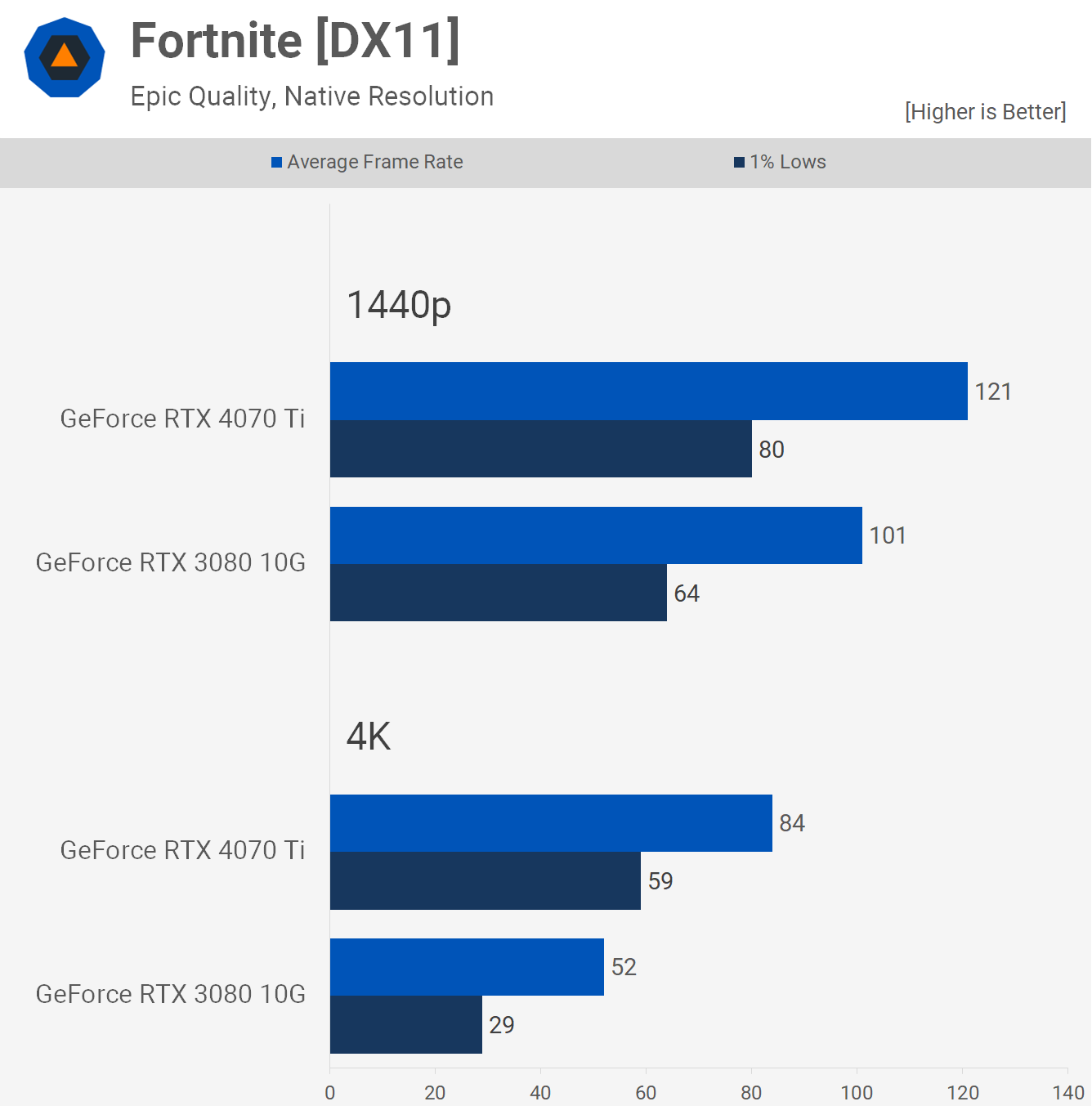

Starting with Fortnite using DX11 with the Epic quality settings and no upscaling, we find that the GeForce RTX 4070 Ti is 20% faster than the RTX 3080 at 1440p. That's not a big difference, and the performance uplift is roughly in line with the price increase (MSRP at launch).

However, at 4K we see a far more substantial 62% increase for the newer RTX 4070 Ti, going from 52 fps up to a much more desirable 84 fps. Basically without upscaling the RTX 3080's performance is very janky at 4K with 1% lows of 29 fps, making the RTX 4070 Ti a much more capable 4K gamer in this game.
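All the "X% faster" figures in this article are relative to the slower card's frame rate. As a rough sketch of that calculation (the helper names here are ours, not from any benchmarking tool), using the 4K Fortnite averages quoted above:

```python
def percent_faster(new_fps: float, old_fps: float) -> float:
    """How much faster new_fps is, as a percentage of old_fps."""
    return (new_fps / old_fps - 1) * 100


def percent_slower(old_fps: float, new_fps: float) -> float:
    """How much slower old_fps is, as a percentage of new_fps."""
    return (1 - old_fps / new_fps) * 100


# 4K Fortnite (DX11) averages from the text: 52 fps vs. 84 fps.
print(f"RTX 4070 Ti is {percent_faster(84, 52):.1f}% faster")
print(f"RTX 3080 is {percent_slower(52, 84):.1f}% slower")
```

Note that the two numbers are not symmetrical: a card that is about 62% faster leaves the other card about 38% slower, because the baseline changes.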

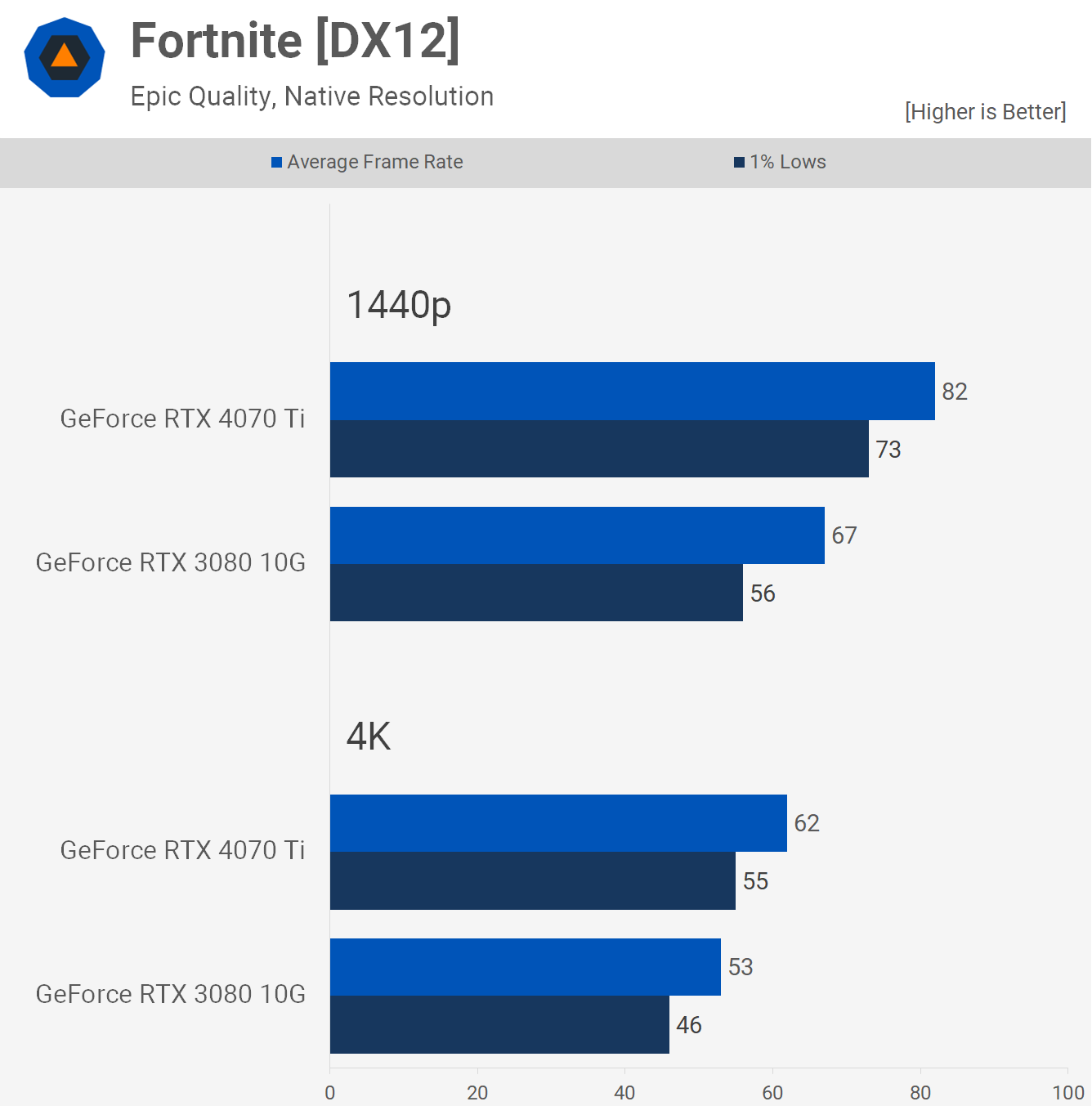

Now, this is interesting. Typically we've found Fortnite to run slower using DX12, though we've noted that this might not be the case with slower CPUs. It appears the same applies to slower GPUs...

At 1440p, the RTX 4070 Ti lost 32% of its DX11 performance when switching to DX12, whereas the RTX 3080 lost 34%. Then at 4K, the 4070 Ti ran 26% slower with DX12 while the 3080 was actually 2% faster, with a massive 59% improvement to 1% lows. The lower overhead of DX12 is helping improve minimum frame rates, but it's capping overall performance, at least in this example.

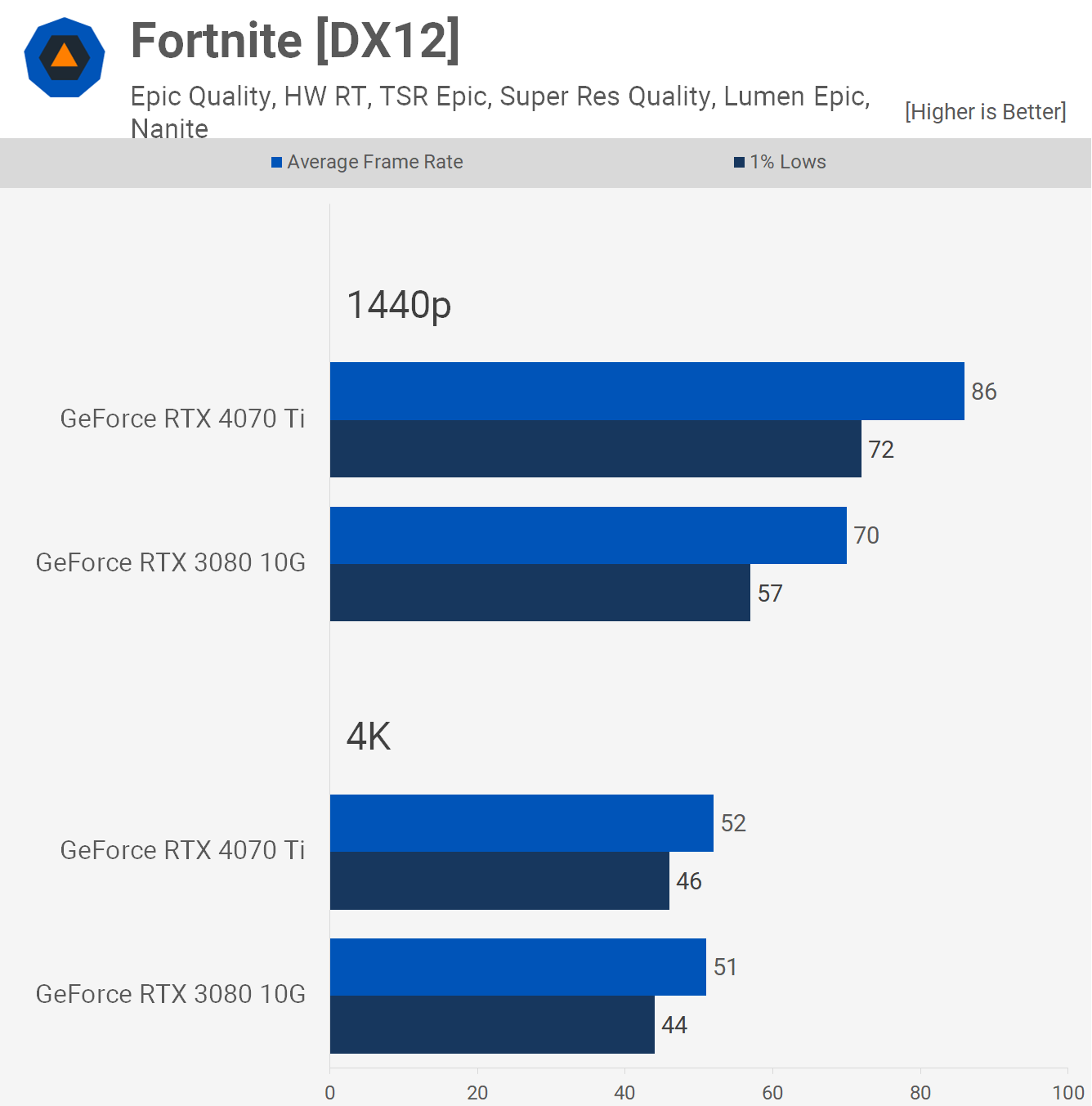

The effect this DX12 limitation has on high-end hardware is amplified when enabling hardware ray tracing, though here we run into more odd data. The RTX 4070 Ti is 23% faster than the 3080 at 1440p, which is about what you'd expect to see based on previous data. However, it's the 4K results that are surprising: the 3080 and 4070 Ti delivered similar performance. Whereas the 4070 Ti saw a 16% reduction in performance when compared to the DX12 results with RT disabled, the 3080 saw just a 4% decline.

Remember that the regular results without ray tracing did not use upscaling, so perhaps this is a result of setting the super resolution to quality mode for the ray tracing test. Whatever the case, the RTX 3080 was surprisingly impressive here.

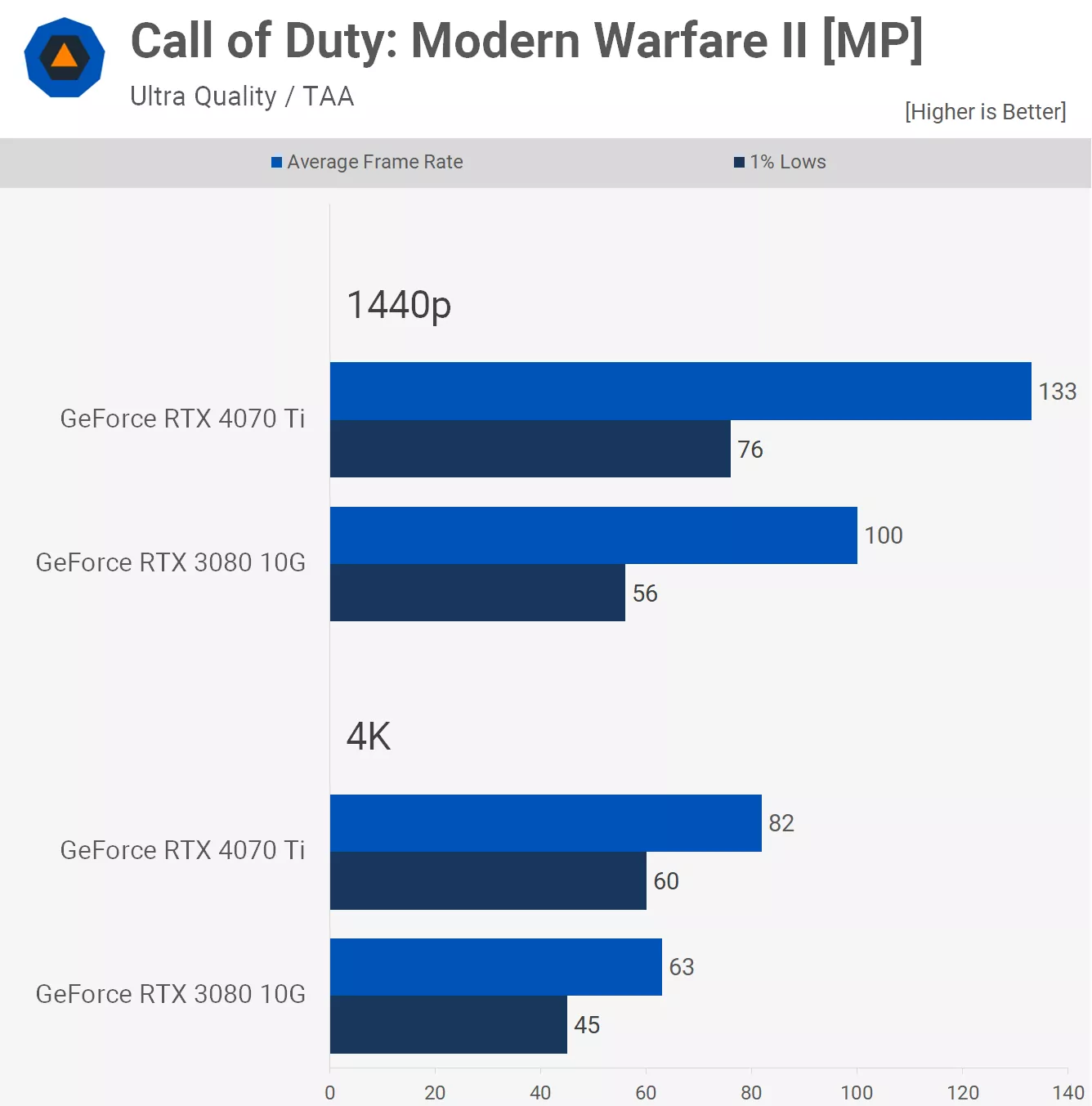

Next up we have Call of Duty: Modern Warfare II, where the RTX 4070 Ti is 33% faster than the 3080 at 1440p and 30% faster at 4K. Those are strong gains there from the newer, but also more expensive Ada Lovelace GPU.

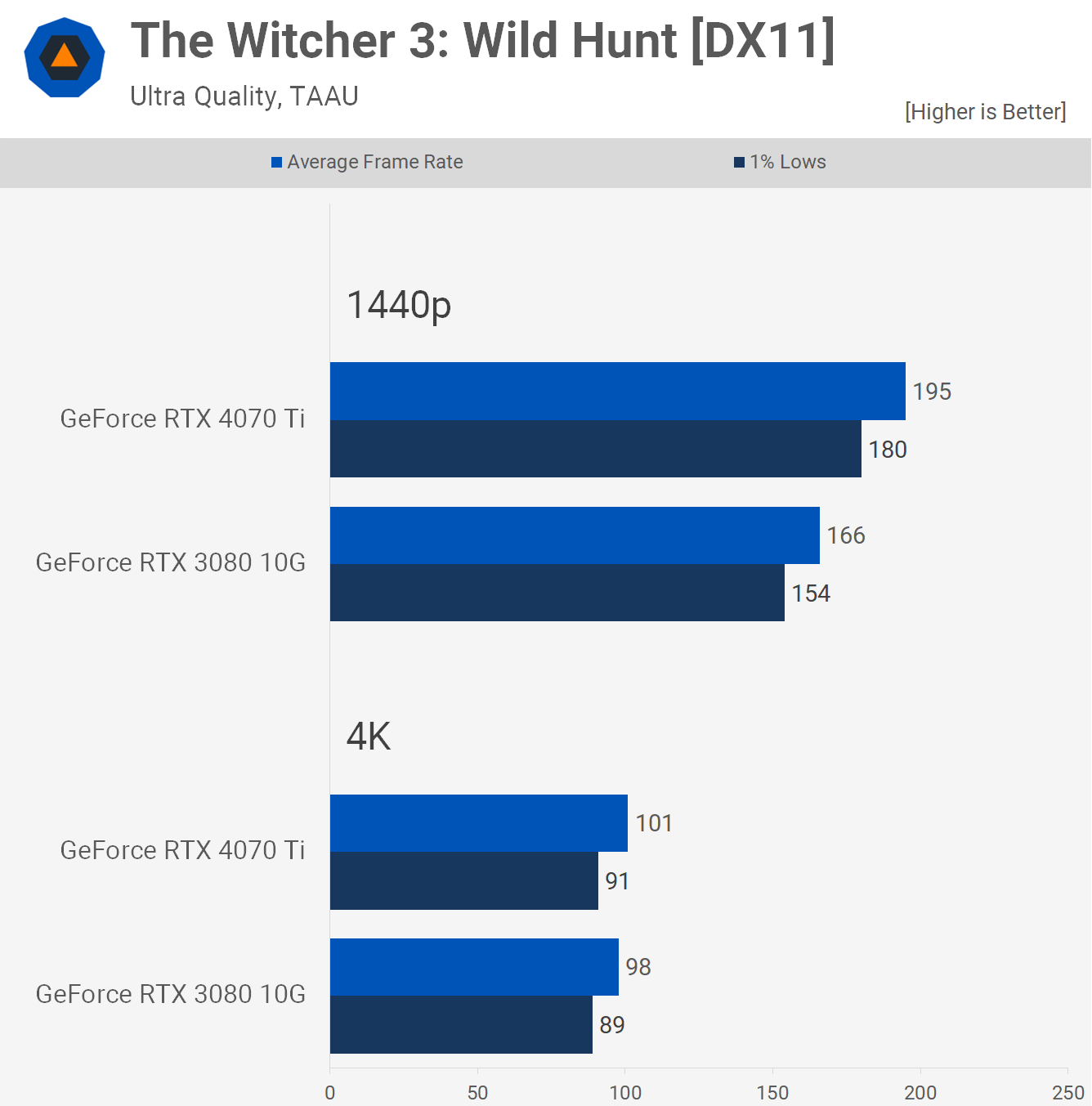

Here we have another example of the margin collapsing at 4K, this time in The Witcher 3: Wild Hunt, where the 4070 Ti beats the 3080 by a fairly comfortable margin at 1440p (17% here), only to match it at the more demanding 4K resolution. The reason for this is almost certainly down to the difference in memory bandwidth, a factor that plays a key role as the resolution increases.

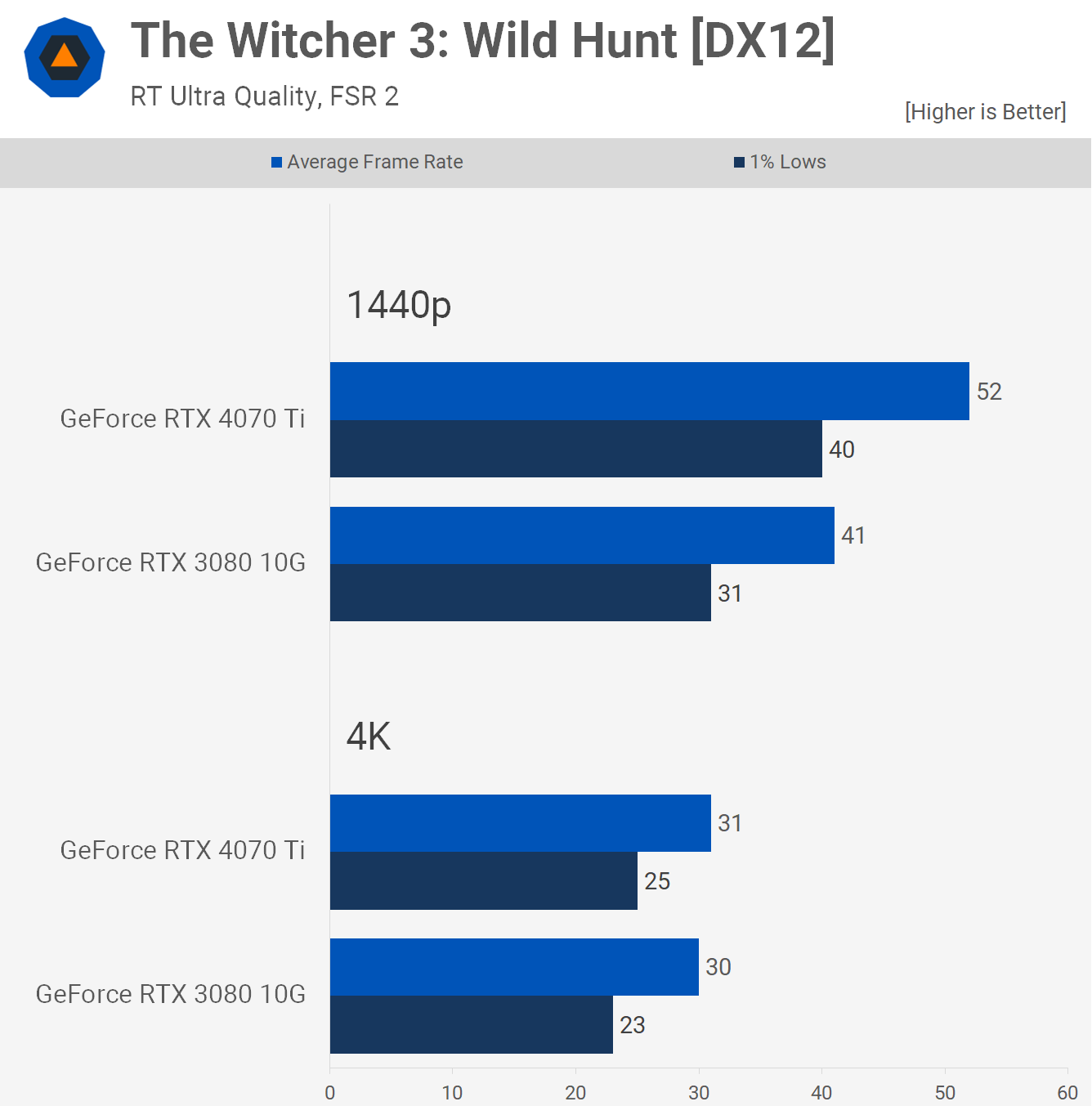

We see a similar trend with ray tracing enabled, along with FSR 2. The RTX 4070 Ti was 27% faster than the RTX 3080 at 1440p, but despite that massive win it was just 3% faster at 4K.

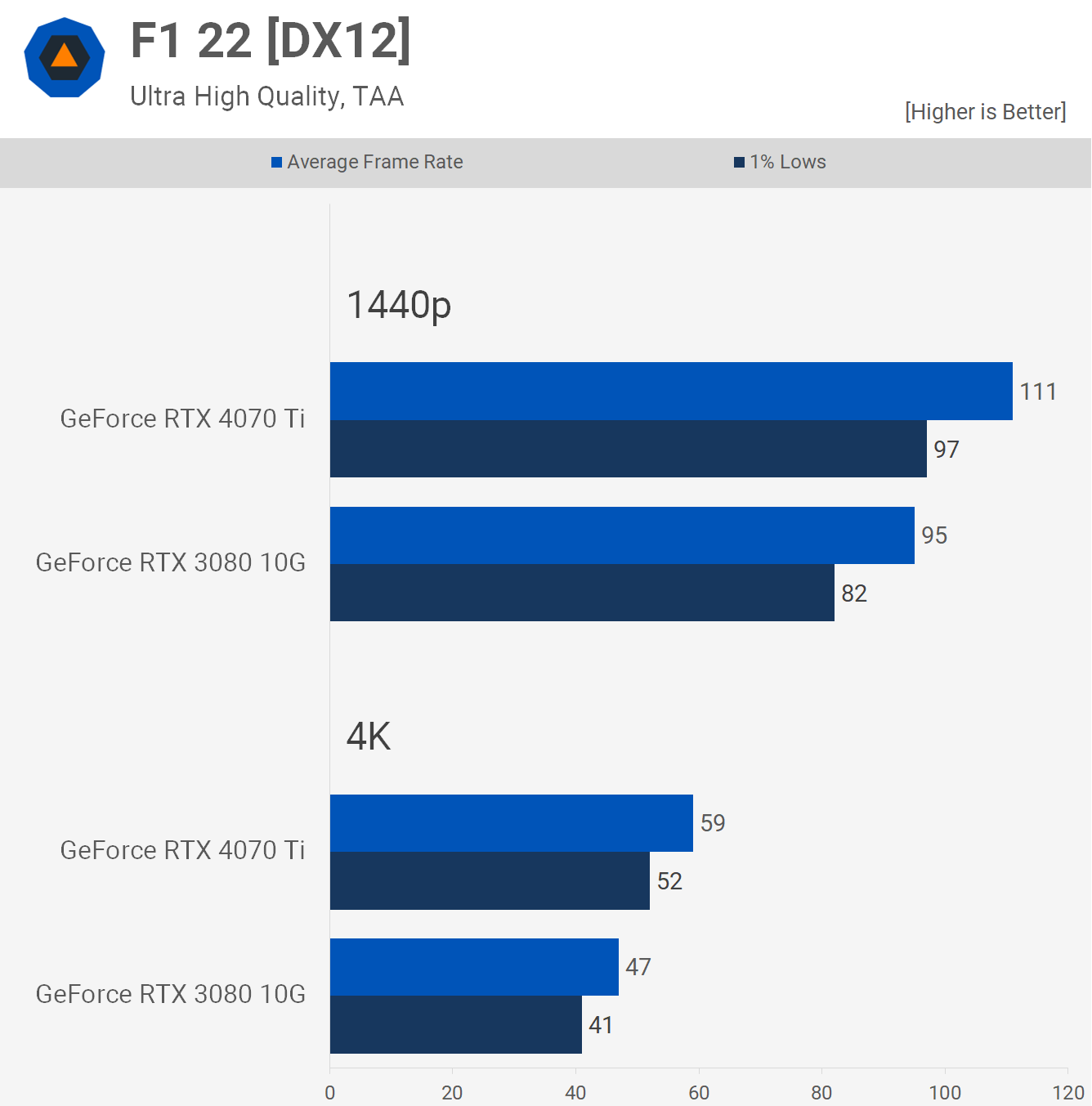

The F1 22 results are more what you'd typically expect to see with the RTX 4070 Ti delivering 17% more frames at 1440p and 26% more at 4K. Clearly this game isn't heavy on memory, so the more limited bandwidth of the 4070 Ti is not an issue.

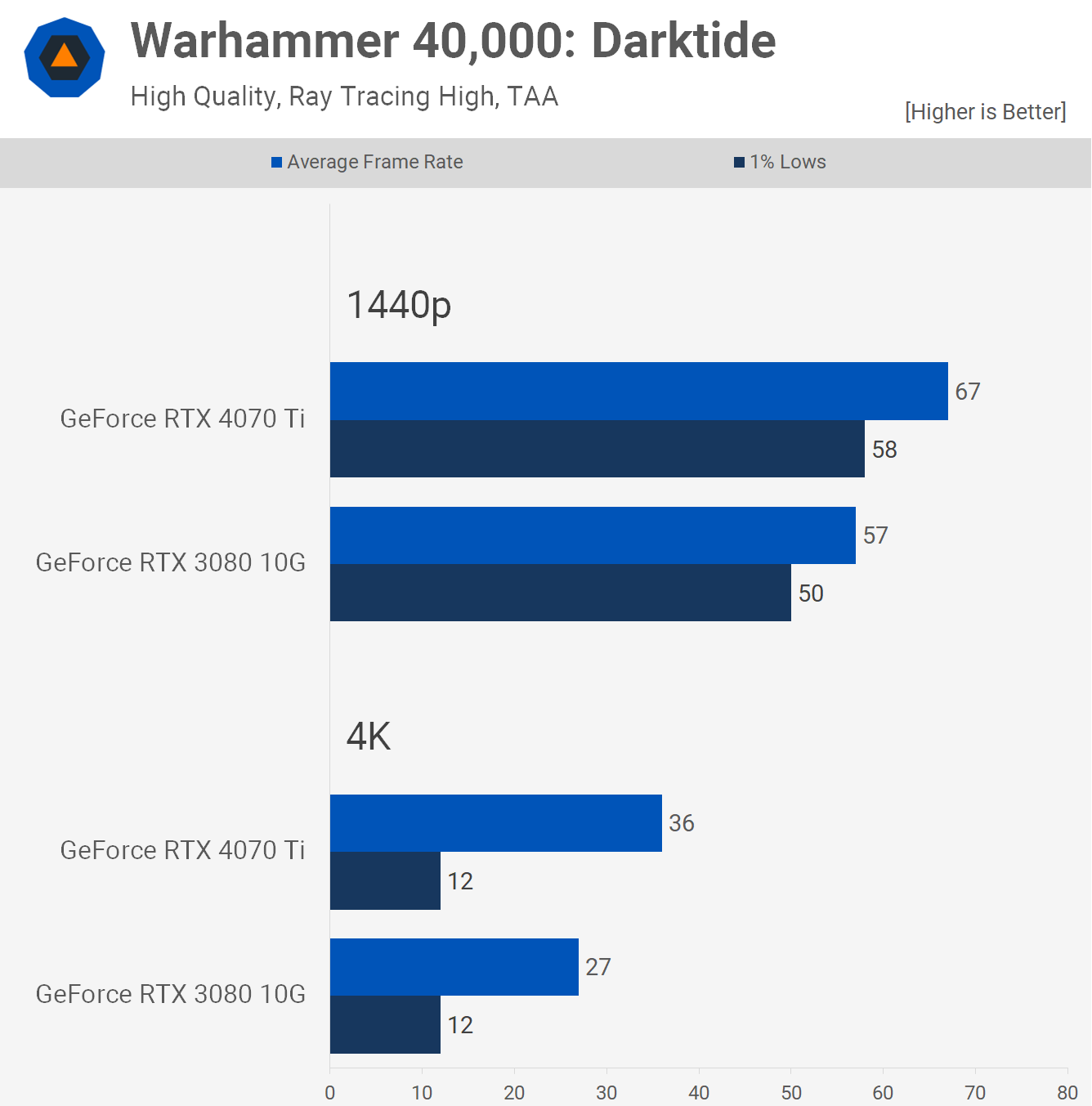

Warhammer 40,000: Darktide was tested using the highest quality preset with ray tracing enabled and this saw the RTX 3080 dip below 60 fps at 1440p, making the 4070 Ti 18% faster.

Then at 4K, the average frame rate of the 4070 Ti was 33% greater, but both suffered from stuttering issues with 1% lows of just 12 fps.

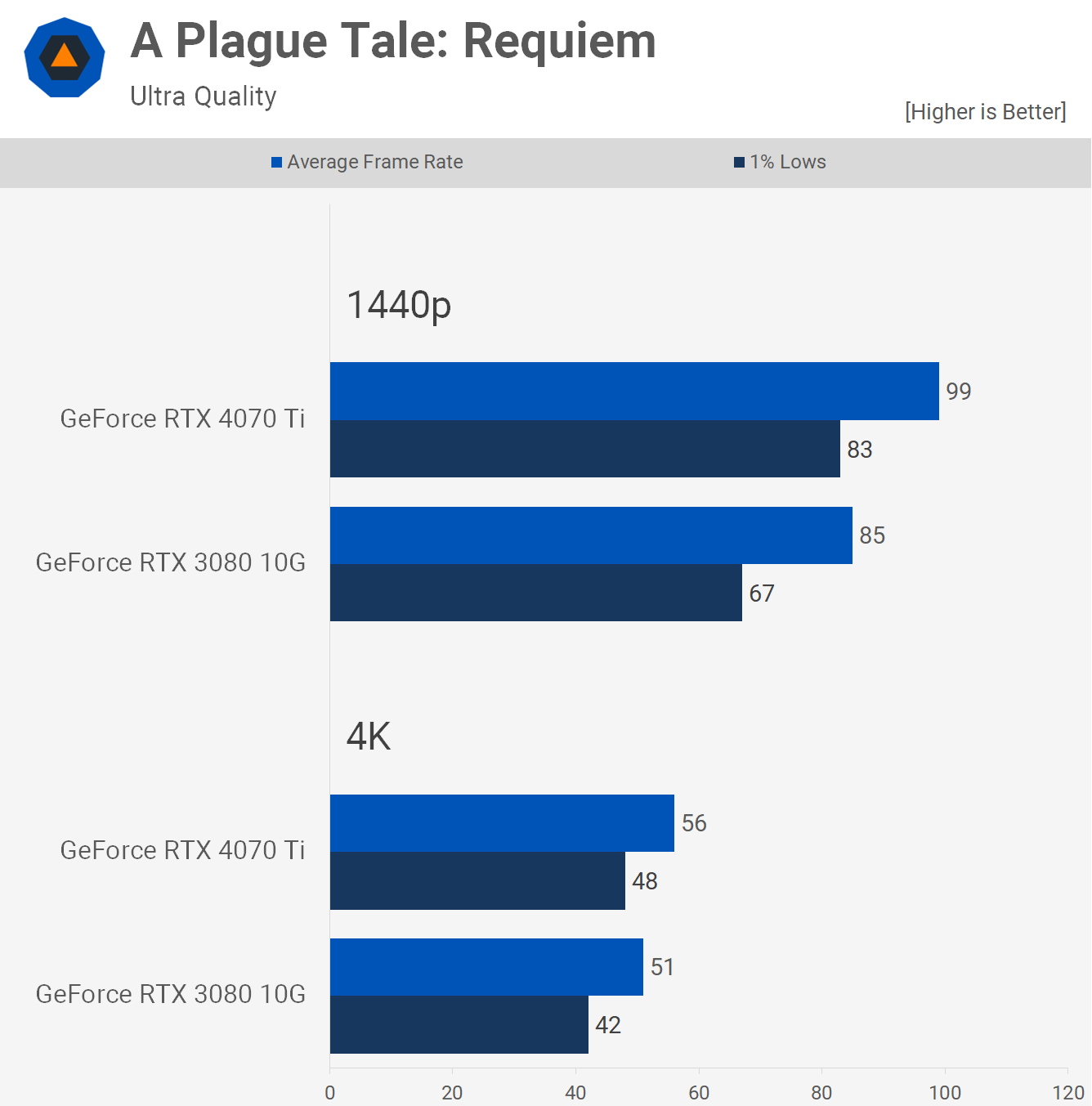

The results for A Plague Tale: Requiem favored the 4070 Ti at both tested resolutions, a 16% performance advantage at 1440p and 10% at 4K, suggesting that performance starts to become more memory bandwidth bound on the 4070 Ti as the resolution increases.

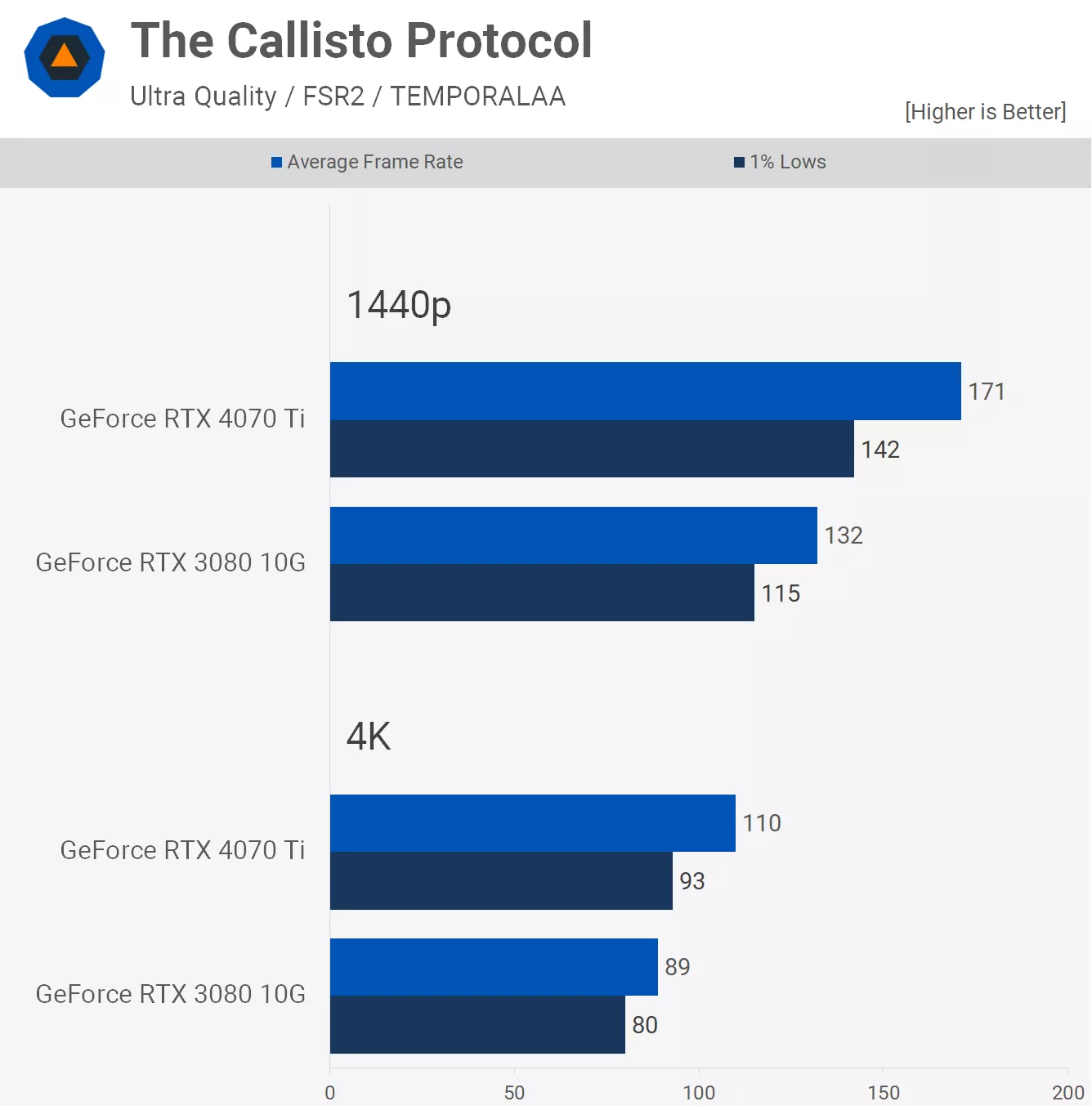

Next up we have The Callisto Protocol and here the 4070 Ti is 30% faster than the RTX 3080 at 1440p, while that margin is reduced to 24% at 4K. The game does use FSR 2 with the ultra quality preset. That's the default configuration, so we haven't altered it, and needless to say, frame rates would be lower at the native resolution.

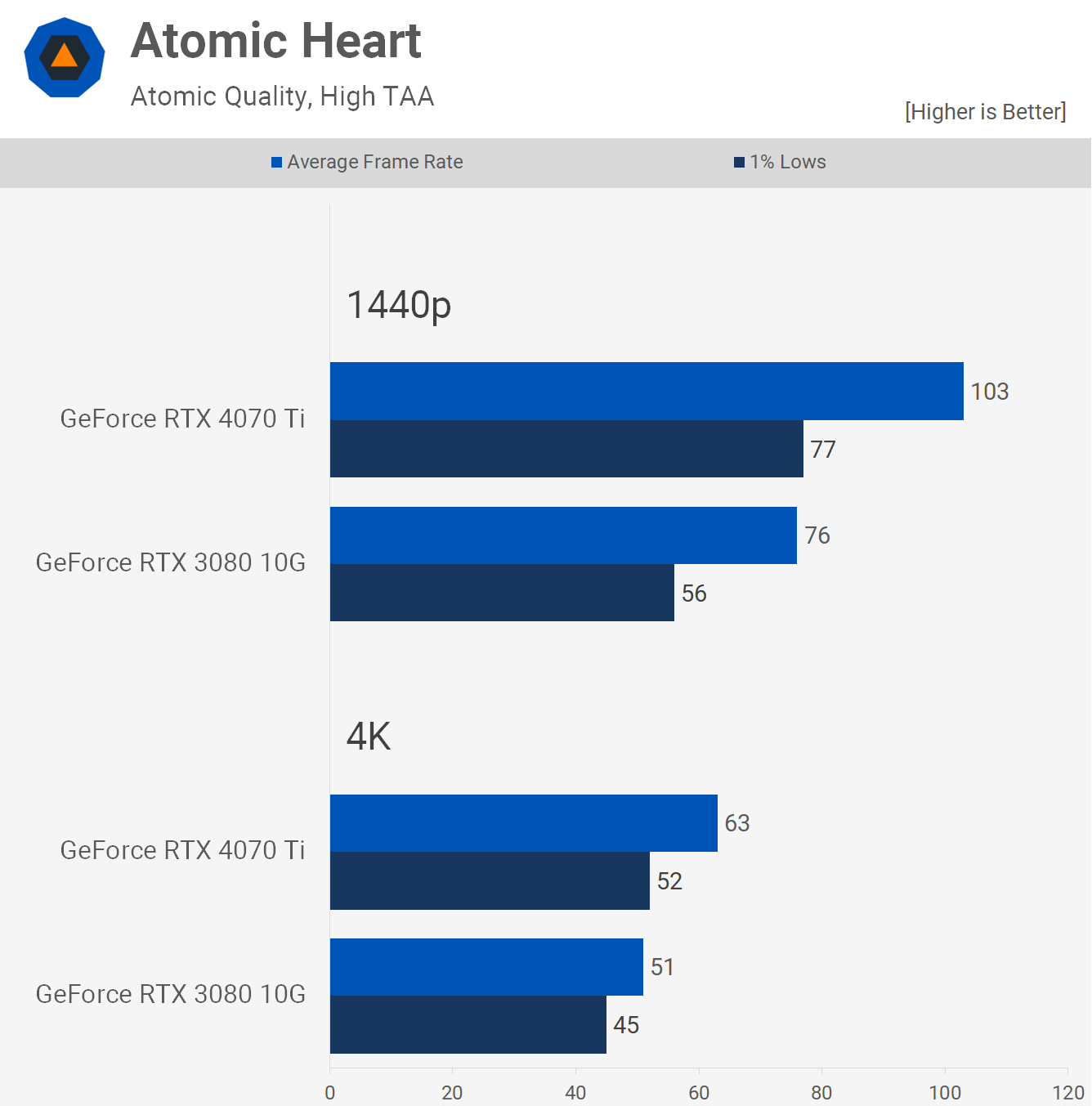

Atomic Heart is another game where the RTX 3080 fares much better relative to the RTX 4070 Ti as the resolution increases. For example, at 1440p the RTX 4070 Ti is 36% faster than the 3080, which is a massive margin, and although it's still 24% faster at 4K, that's a significant reduction compared to the 1440p results.

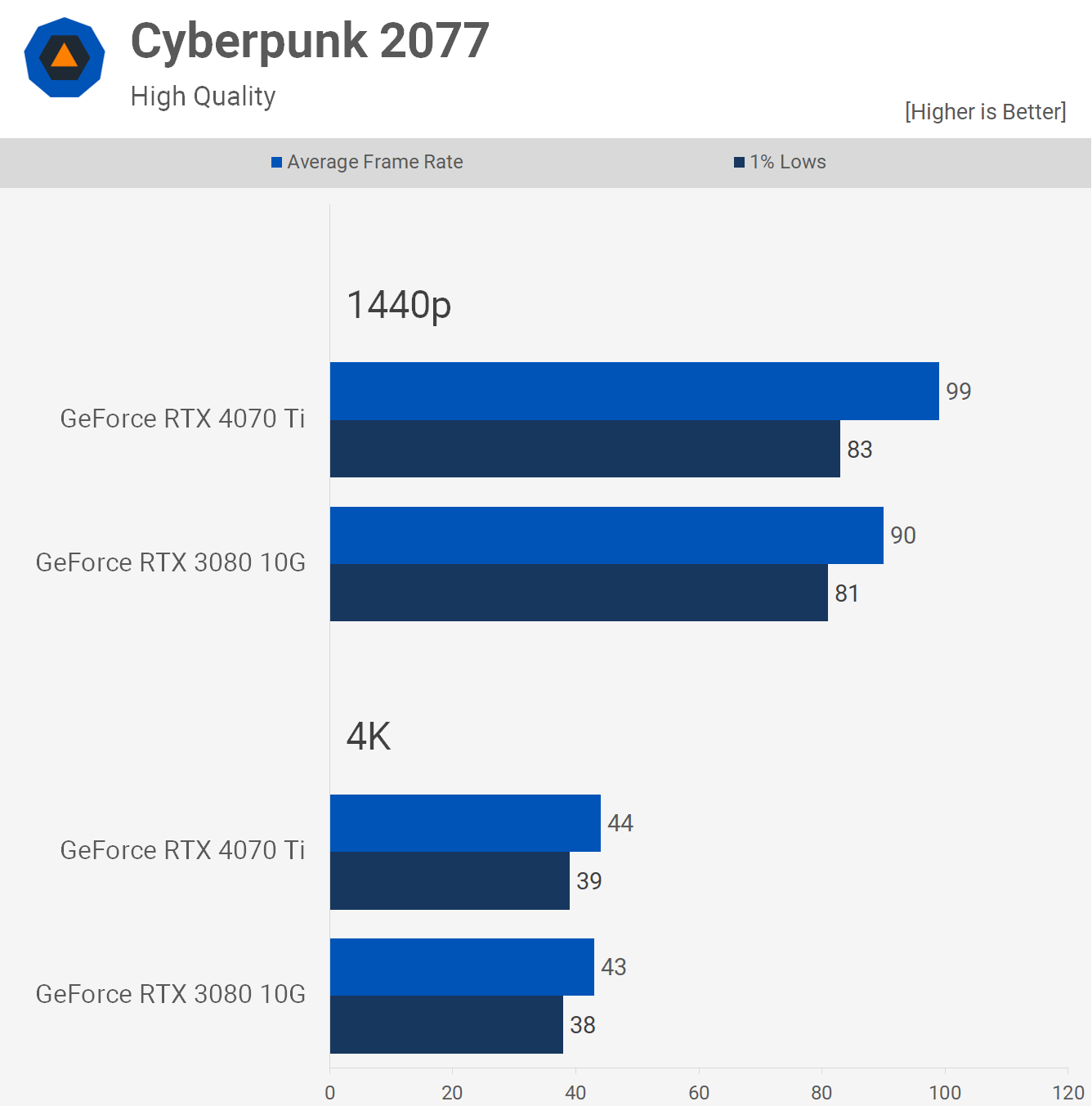

Interestingly, performance in Cyberpunk 2077 is much more competitive, even with ray tracing disabled. Using high quality settings, the RTX 4070 Ti was just 10% faster at 1440p with comparable 1% lows. Then at 4K performance was much the same, 43 fps vs 44 fps.

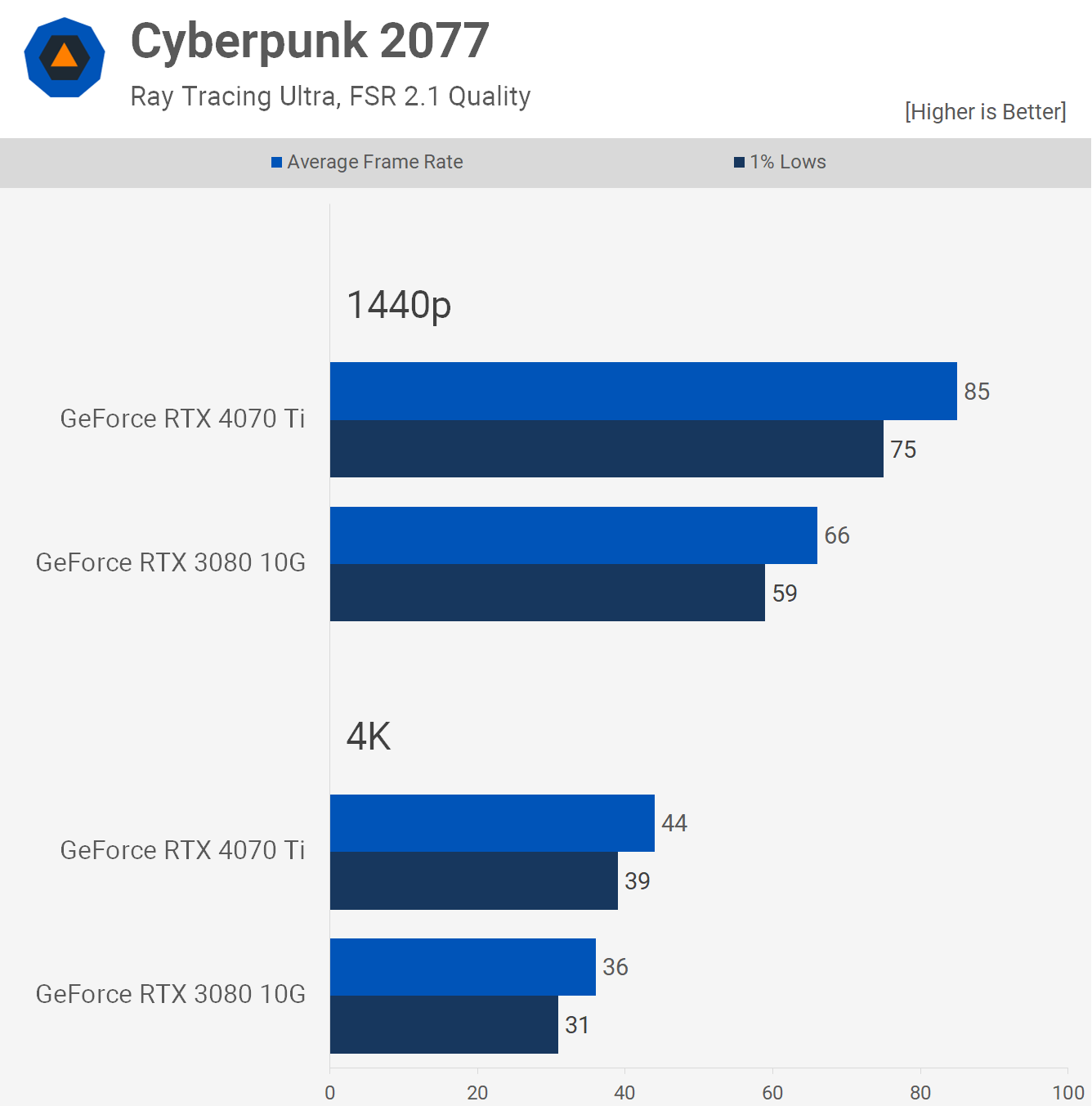

Given what we saw in Cyberpunk with RT effects disabled, these results might be a little surprising, though Ada Lovelace is meant to be more efficient when it comes to RT performance. That's showcased here as the RTX 4070 Ti is now 29% faster than the RTX 3080 at 1440p and 22% faster at 4K, and those are solid performance gains.

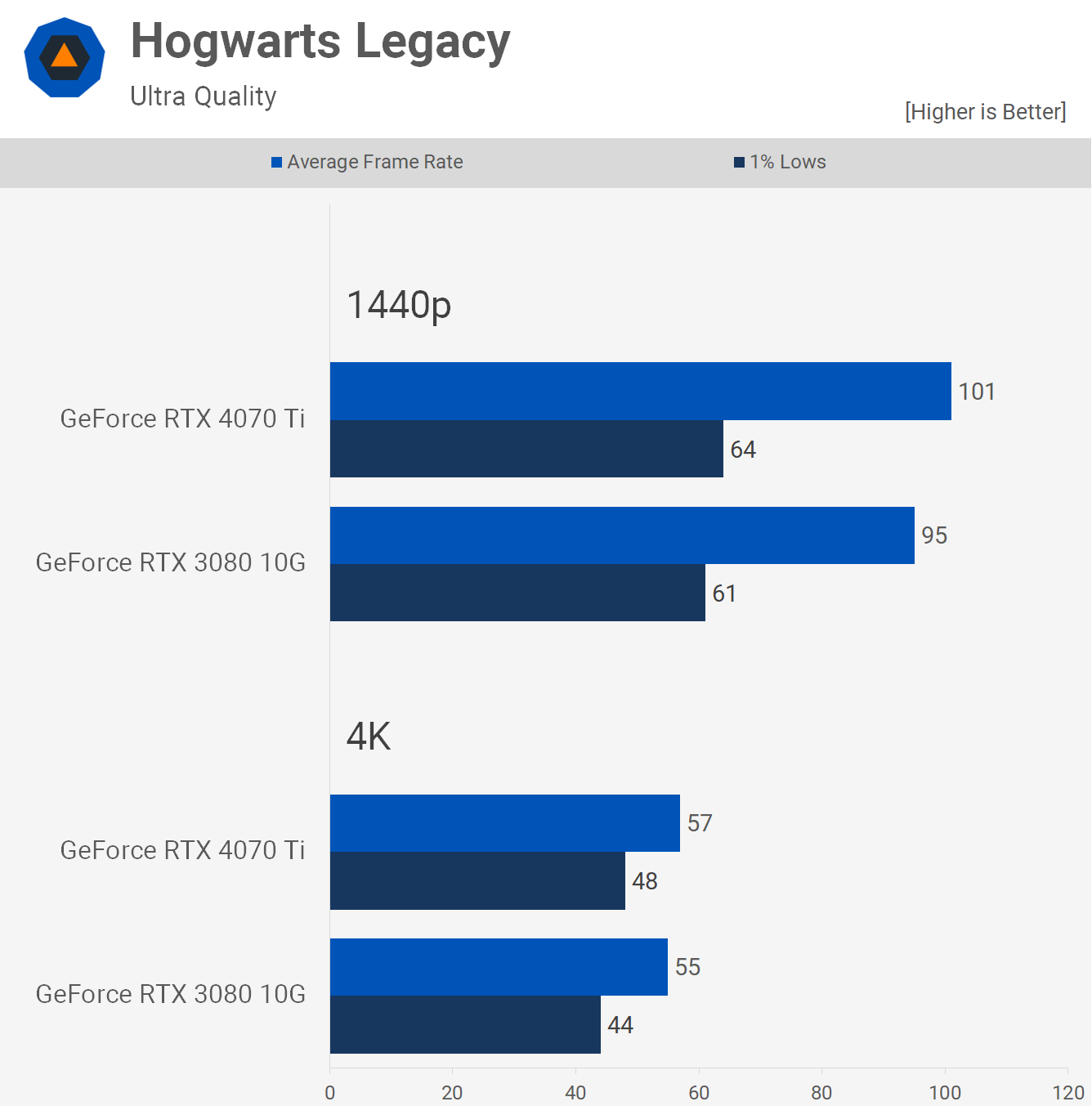

As with Cyberpunk 2077, we've tested Hogwarts Legacy with and without ray tracing, and we'll start with the standard ultra results. Here the RTX 3080 and 4070 Ti are surprisingly similar at both tested resolutions: the new Ada Lovelace GPU was just 6% faster at 1440p and a mere 4% faster at 4K.

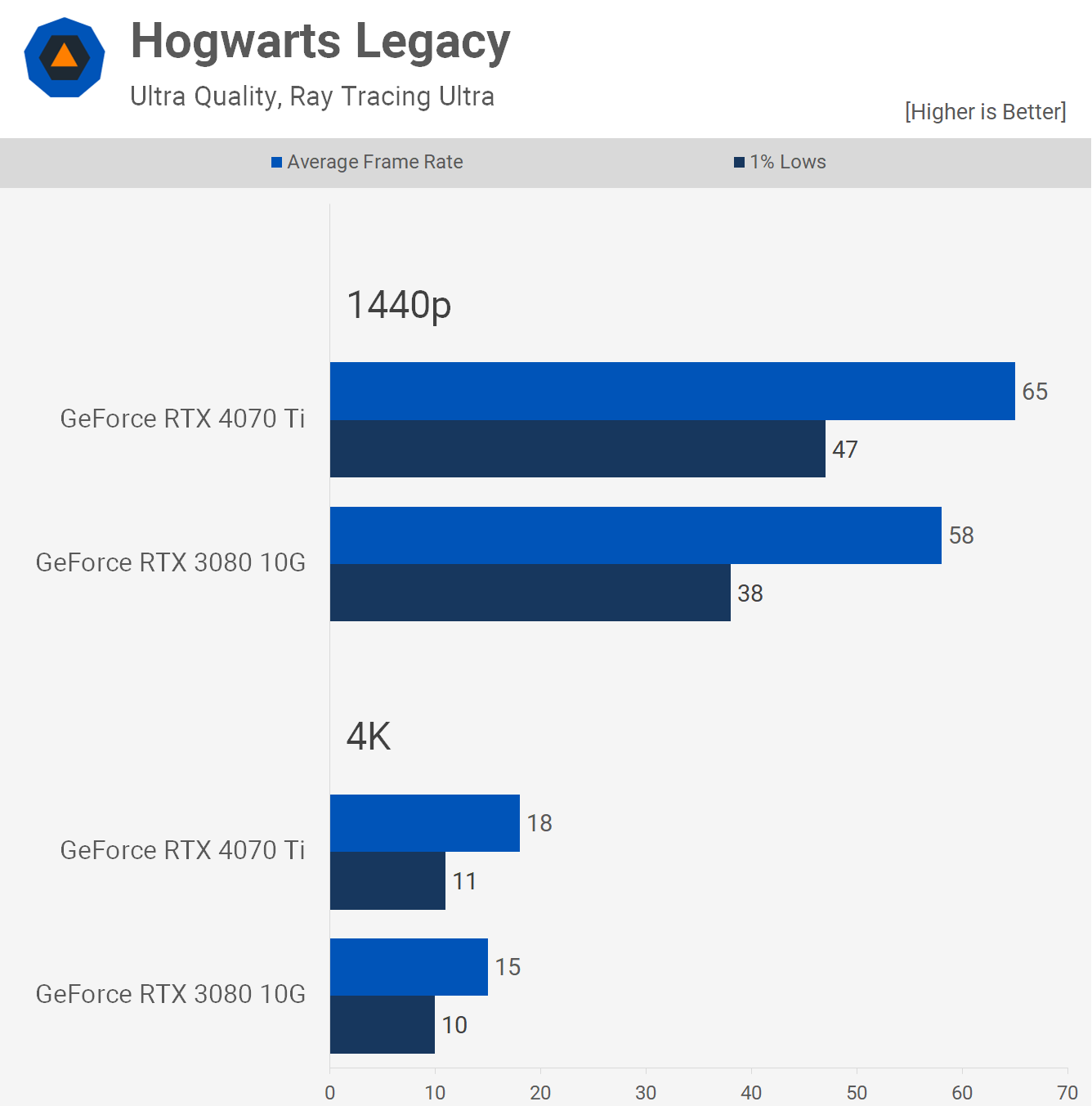

Now with ray tracing enabled, the RTX 4070 Ti extended its lead out to 12% at 1440p, rendering 65 fps on average. However, 4K is too much for even a 12GB VRAM buffer, so under these conditions both models were a stuttery mess in our test.

The latest Hogwarts Legacy patch hasn't addressed this issue, assuming the combination of high quality textures and the added load of ray tracing is indeed the cause. In any case, the RTX 3080 suffers visually from a lot of compressed textures and significant pop-in, but it does work at 1440p, at least in our testing.

Performance Summary

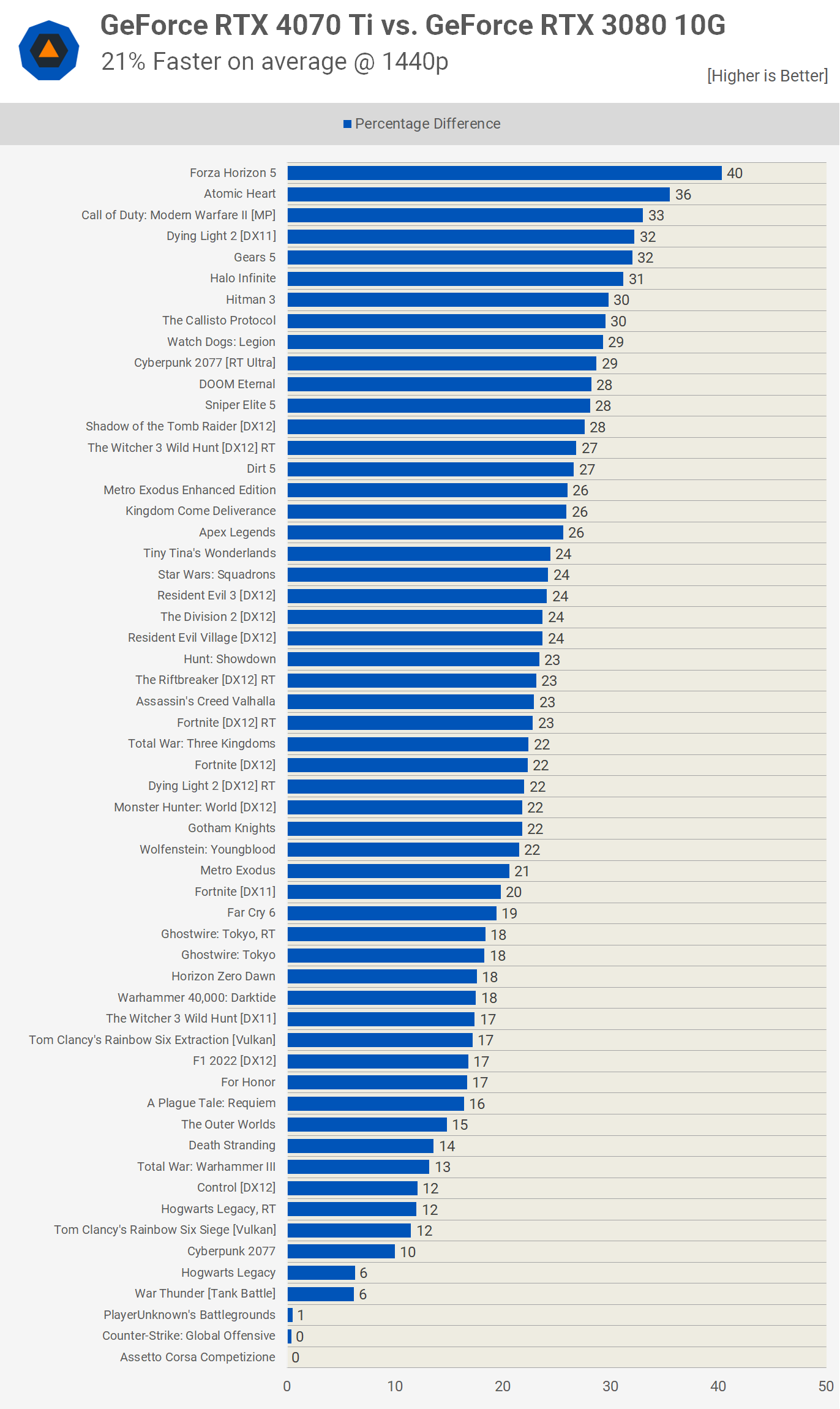

Now it's time to compare the RTX 4070 Ti and RTX 3080 across all the 50 games tested.

The GeForce RTX 4070 Ti is on average 21% faster than the RTX 3080 10GB at 1440p, a significant uplift and what would be an amazing result if the RTX 4070 Ti didn't cost considerably more, too.
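As a sanity check, the 1440p margins quoted for the highlighted games earlier in this article can be averaged on their own. This is only a sketch under two assumptions: it covers just the 14 highlighted results rather than the full 50-game data set, and it uses a geometric mean of the performance ratios, which is a common way to average relative results but is not necessarily how the chart figure was produced.

```python
from statistics import geometric_mean

# 1440p margins (% faster for the RTX 4070 Ti) quoted in the highlights.
margins_1440p = {
    "Fortnite (DX11)": 20,
    "Fortnite (RT)": 23,
    "Modern Warfare II": 33,
    "The Witcher 3": 17,
    "The Witcher 3 (RT)": 27,
    "F1 22": 17,
    "Darktide (RT)": 18,
    "A Plague Tale: Requiem": 16,
    "The Callisto Protocol": 30,
    "Atomic Heart": 36,
    "Cyberpunk 2077": 10,
    "Cyberpunk 2077 (RT)": 29,
    "Hogwarts Legacy": 6,
    "Hogwarts Legacy (RT)": 12,
}

# Convert each percentage into a ratio (20% faster -> 1.20) before averaging.
ratios = [1 + pct / 100 for pct in margins_1440p.values()]
average_uplift_pct = (geometric_mean(ratios) - 1) * 100

print(f"{len(ratios)} results, average uplift: {average_uplift_pct:.1f}%")
```

Even with this small subset, the result lands close to the 21% average reported for the full set of games.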

The biggest wins for the RTX 4070 Ti came in Forza Horizon 5, Atomic Heart, Modern Warfare II, Dying Light 2 without ray tracing, Gears 5, Halo Infinite, Hitman 3 and The Callisto Protocol.

The smallest wins were mostly seen when CPU limited in titles such as ACC, CSGO, PUBG, War Thunder and Hogwarts Legacy with RT disabled.

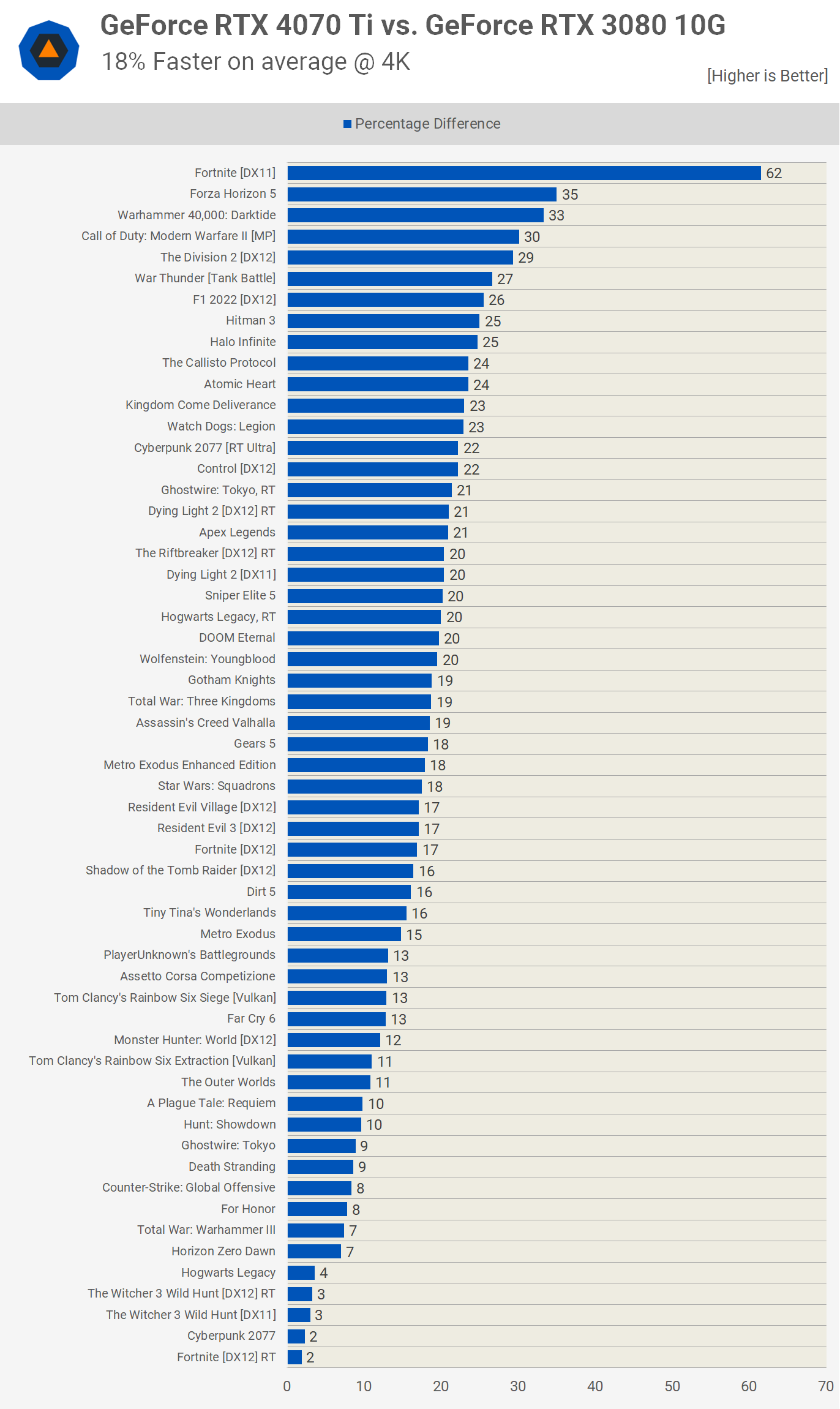

At 4K resolution the overall margin is slightly reduced to 18% in favor of the GeForce RTX 4070 Ti. Fortnite using DirectX 11 was the big outlier here, providing the 4070 Ti with a massive 62% win, though titles such as Forza Horizon 5 and Modern Warfare II were also very positive for the newer GPU.

As you'd expect, there were fewer CPU limited results at 4K, but there were also more instances where the RTX 4070 Ti suffered due to its more limited memory bandwidth.

What We Learned

On average, the GeForce RTX 4070 Ti is around 19% faster than the RTX 3080 10GB (21% at 1440p and 18% at 4K), with margins typically peaking around 30 to 40%. We saw no games where the RTX 3080 beat the 4070 Ti, but there were a few titles where performance was almost the same.

Looking back at our day-one review for the RTX 4070 Ti, we found that on average it was 21% faster than the RTX 3080 10GB at 4K, so despite expanding our testing from 16 to 50 games, we've found the same kind of margins.

This is a somewhat disappointing result that demonstrates how underwhelming this latest GPU generation is from a value perspective. At MSRP, the GeForce RTX 4070 Ti is a mere 5% improvement in cost per frame when compared to the RTX 3080, giving gamers about the same level of value two and a half years later.

In fact, it's worse than that because at present the RTX 4070 Ti typically costs $850, making it 2% more costly per frame when compared to the older RTX 3080, but hey, at least you get DLSS 3 Frame Generation (that's sarcasm right there). We are sure that's not going to incentivize anyone into spending more on a 4070 Ti or RTX 4080.
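For reference, here is a rough sketch of the cost per frame comparison. It assumes the $700 launch MSRP for the RTX 3080 and treats the average performance margin as a single ratio; the function name is ours, for illustration only.

```python
def cost_per_frame_change_pct(new_price: float, old_price: float,
                              perf_ratio: float) -> float:
    """Percentage change in cost per frame of the new card vs. the old one.

    perf_ratio is new fps / old fps (1.19 means 19% faster).
    A negative result means the new card is cheaper per frame.
    """
    return (new_price / perf_ratio / old_price - 1) * 100


RTX_3080_MSRP = 700

# Try the overall, 4K, and 1440p average margins against both price points.
for margin in (1.19, 1.18, 1.21):
    for price in (800, 850):
        change = cost_per_frame_change_pct(price, RTX_3080_MSRP, margin)
        print(f"${price} at {margin:.2f}x: {change:+.1f}% cost per frame")
```

The outcome is sensitive to which average margin you plug in, but in every case the result sits within a few percent of zero, which is the point: value has barely moved in two and a half years.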

You would have to be coming from an RTX 2080 or 2080 Super for the 4070 Ti to make sense. Those used to cost $700 - $800 at MSRP, and while they didn't represent good value when compared to the Pascal generation that came before, going from a 2080 series graphics card to a 4070 Ti for roughly the same money would be a massive upgrade in absolute FPS.

Also, as we mentioned in the intro, if you own a much older GPU and are open to shopping second hand, the RTX 3080 is attractive at around $550 on eBay, as are other similar GPUs in AMD's Radeon family.

As we said in our Radeon RX 7900 XT vs GeForce RTX 4070 Ti comparison, neither of these GPUs represents good value, but they are also as good as it gets today at this performance tier. You could argue AMD and Nvidia are working together to beat gamers into submission, and if that's the case we don't expect the situation to improve. It's likely GPU upgrades at the same price point are only going to make sense every 5 years or so, or at least that's how things currently stand.

In other words, that means GeForce RTX 3080 owners will be best off holding out for at least another generation, and if games in a year or so are hitting that 10GB VRAM buffer too hard, you'll have to accept lowering texture settings, which most will probably find acceptable given its age at that point. And that's going to do it for this GPU comparison.