It's time for a new GPU mega benchmark, comparing the new Radeon RX 7900 XT head to head with the GeForce RTX 4070 Ti in over 50 games. Some titles were tested in multiple configurations, with and without ray tracing for example, so there's a lot to go over.

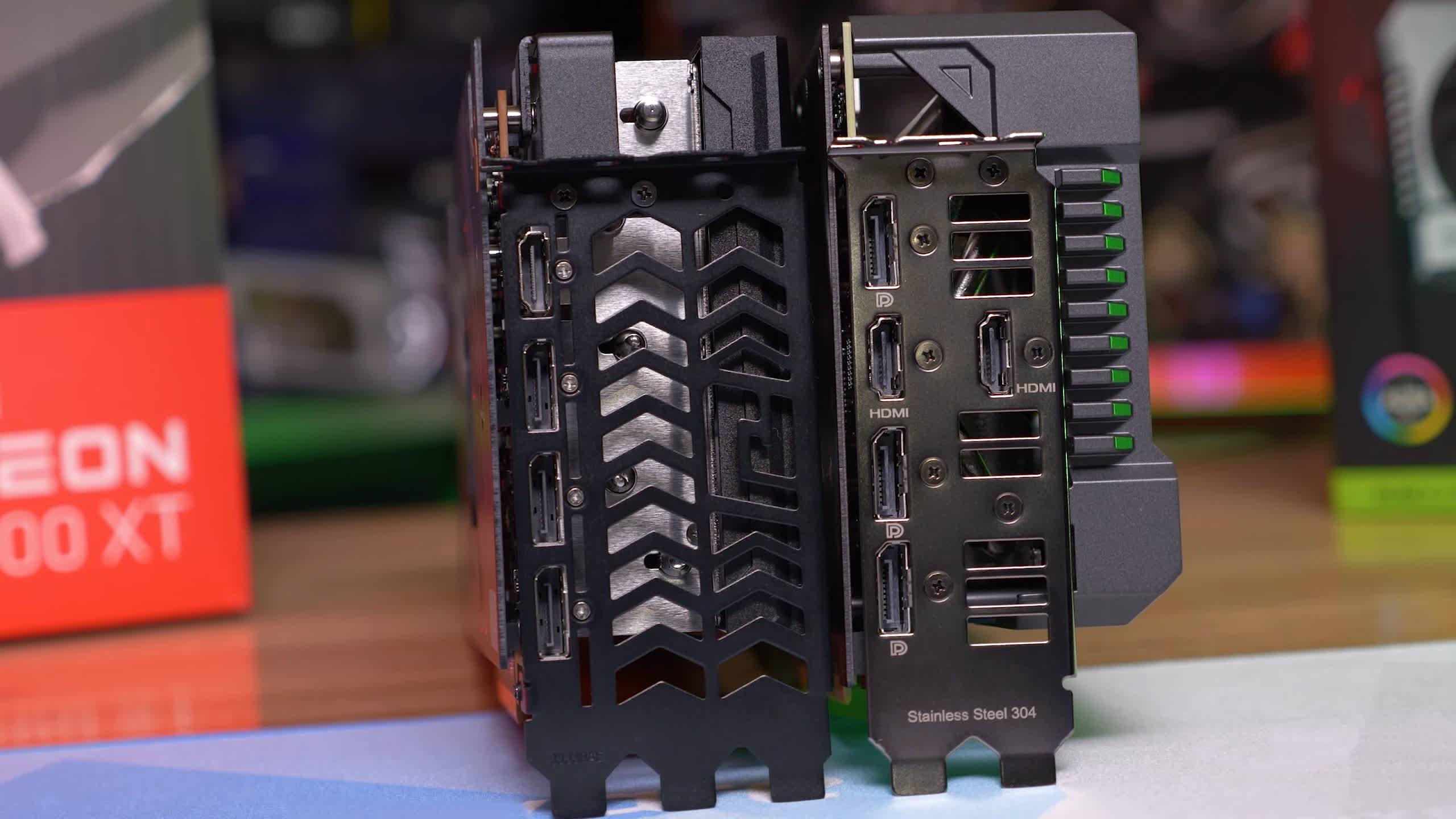

For this shootout we've got our hands on the massive PowerColor Hellhound Radeon 7900 XT, which can currently be had for $830, $70 below the $900 MSRP, so that's nice. In fact, quite a few 7900 XTs are now selling below MSRP, some for as little as $800, which makes you wonder why AMD didn't just start at this more reasonable price point to begin with.

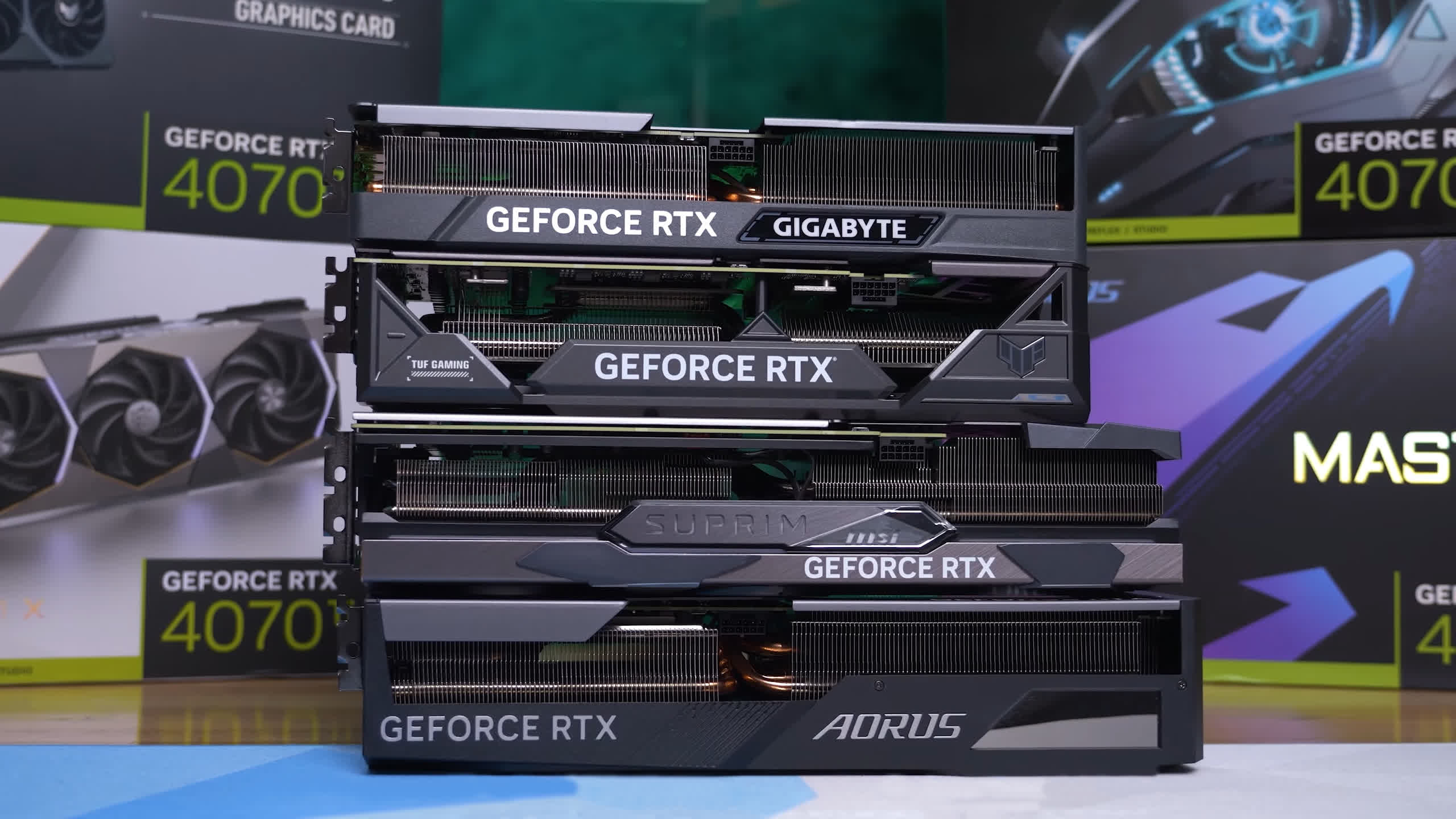

For comparison we have the Asus TUF Gaming RTX 4070 Ti, one of the more affordable 4070 Ti models, though it's still priced above MSRP at $850, a $50 premium.

Therefore, as things stand in the market today, there are multiple Radeon 7900 XT models available for $800, while the cheapest 4070 Ti is $830 and most are priced at $850 or more. That's not a massive difference, but the Radeon GPU is now the cheaper of the two, the opposite of launch pricing.

Now, our day-one Radeon RX 7900 XT review wasn't all that favorable: we found it offered exceptionally poor value at $900. We felt you were better off spending $100 more on the 7900 XTX, which wasn't great value either but was faster and better overall.

The GeForce competition was really no better though. The RTX 4080 set a low bar at $1,200 and you would think AMD would have jumped at the chance to make Nvidia look foolish, but that was not the case. Nvidia then replied to the weak Radeon 7900 XT with an almost equally weak offering, the RTX 4070 Ti at $800.

Even though the GeForce RTX 4070 Ti was underwhelming, its lower price tag placed it in a slightly better position in terms of value compared to the Radeon.

Three months later, it would seem the tables have turned... and now it's the Radeon GPU that's the more affordable option, so it's going to be interesting to see how they stack up in terms of performance using the latest drivers across a massive range of games.

For testing we used a system powered by the Ryzen 9 7950X3D, but with the second CCD disabled to ensure maximum gaming performance, so essentially we're testing with the yet-to-be-released 7800X3D. We used a Gigabyte X670E Aorus Master motherboard with 32GB of DDR5-6000 CL30 memory and the latest AMD and Nvidia display drivers.

As usual, we'll go over the data for around a dozen games before jumping into the big breakdown graphs. The resolutions of interest here are 1440p and 4K, so let's get into it.

Benchmarks

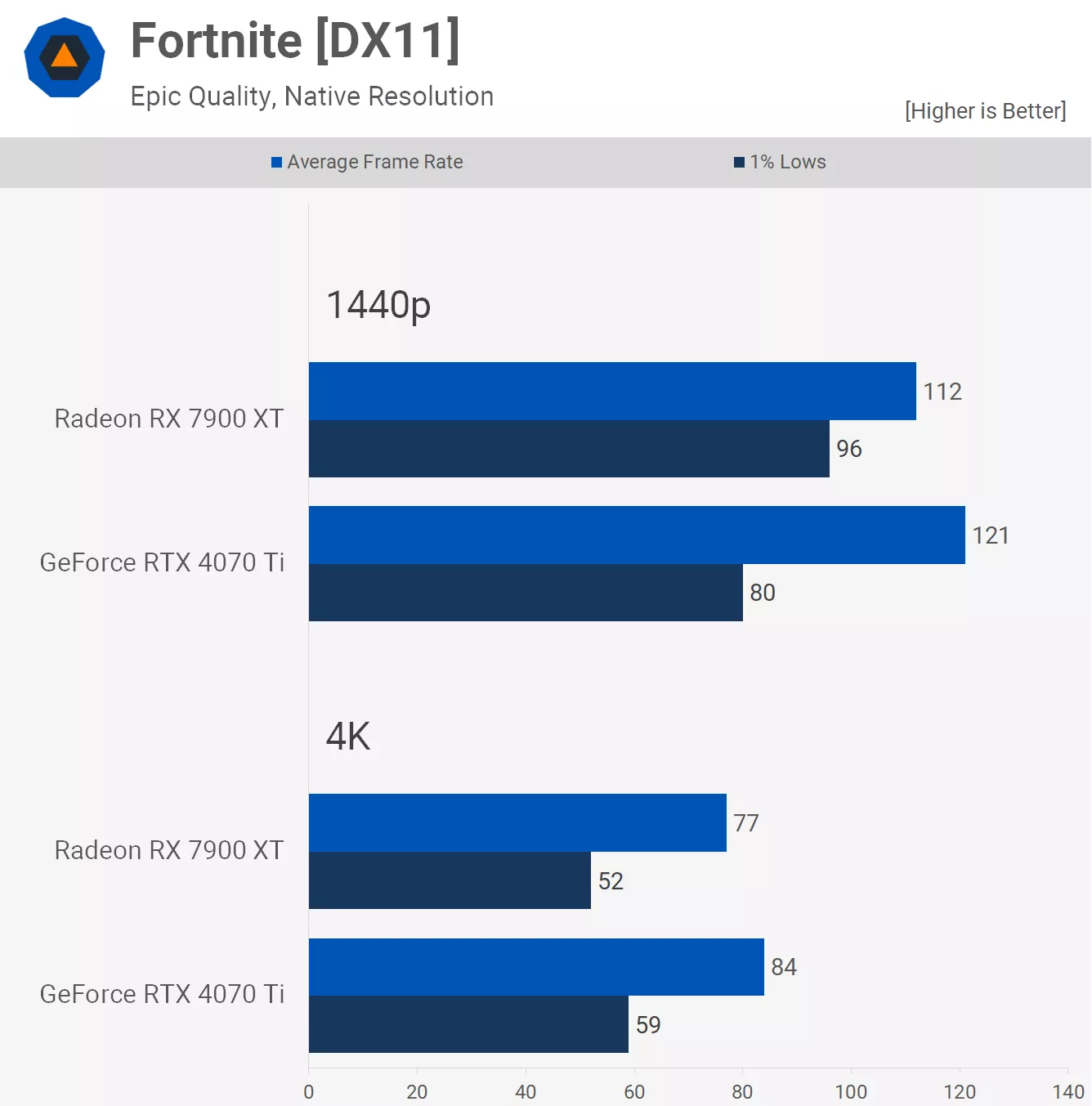

Starting with Fortnite using the Epic quality preset in DirectX 11 mode, we find that the GeForce RTX 4070 Ti is the faster GPU in this popular title, though its frame time consistency is nowhere near as good as the Radeon 7900 XT's, with a much larger gap between the average frame rate and 1% lows.

As a result, the GeForce RTX 4070 Ti was 8% faster on average at 1440p, but 17% slower when comparing 1% lows.

The trend changes at 4K, though, where the RTX 4070 Ti is the clear winner, delivering 9% higher average frame rates and 13% higher 1% lows.
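To illustrate why a card can win on average frame rate yet lose on 1% lows, here is a minimal sketch. It assumes one common definition of the 1% low (the frame rate over the slowest 1% of frames); capture tools differ, and some use the 99th-percentile frame time instead, so this is not necessarily the exact method behind our charts. The frame time traces are hypothetical.

```python
def average_fps(frame_times_ms):
    """Average frame rate: total frames divided by total time (in seconds)."""
    return 1000.0 * len(frame_times_ms) / sum(frame_times_ms)


def one_percent_low_fps(frame_times_ms):
    """Frame rate over the slowest 1% of frames (at least one frame).

    Assumed definition for illustration; other tools use the
    99th-percentile frame time instead.
    """
    slowest_first = sorted(frame_times_ms, reverse=True)
    n = max(1, len(slowest_first) // 100)
    worst = slowest_first[:n]
    return 1000.0 * n / sum(worst)


# Hypothetical traces: 200 frames each, identical total time (2,060 ms).
smooth = [10.3] * 200                # every frame takes 10.3 ms
spiky = [10.0] * 198 + [40.0] * 2    # mostly fast frames plus two 40 ms stutters

for name, trace in (("smooth", smooth), ("spiky", spiky)):
    print(name, round(average_fps(trace), 1), round(one_percent_low_fps(trace), 1))
```

Both traces average about 97 fps, but the spiky one's 1% low falls to 25 fps, which is the kind of gap between average and 1% low that separates the two GPUs in Fortnite at 1440p.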

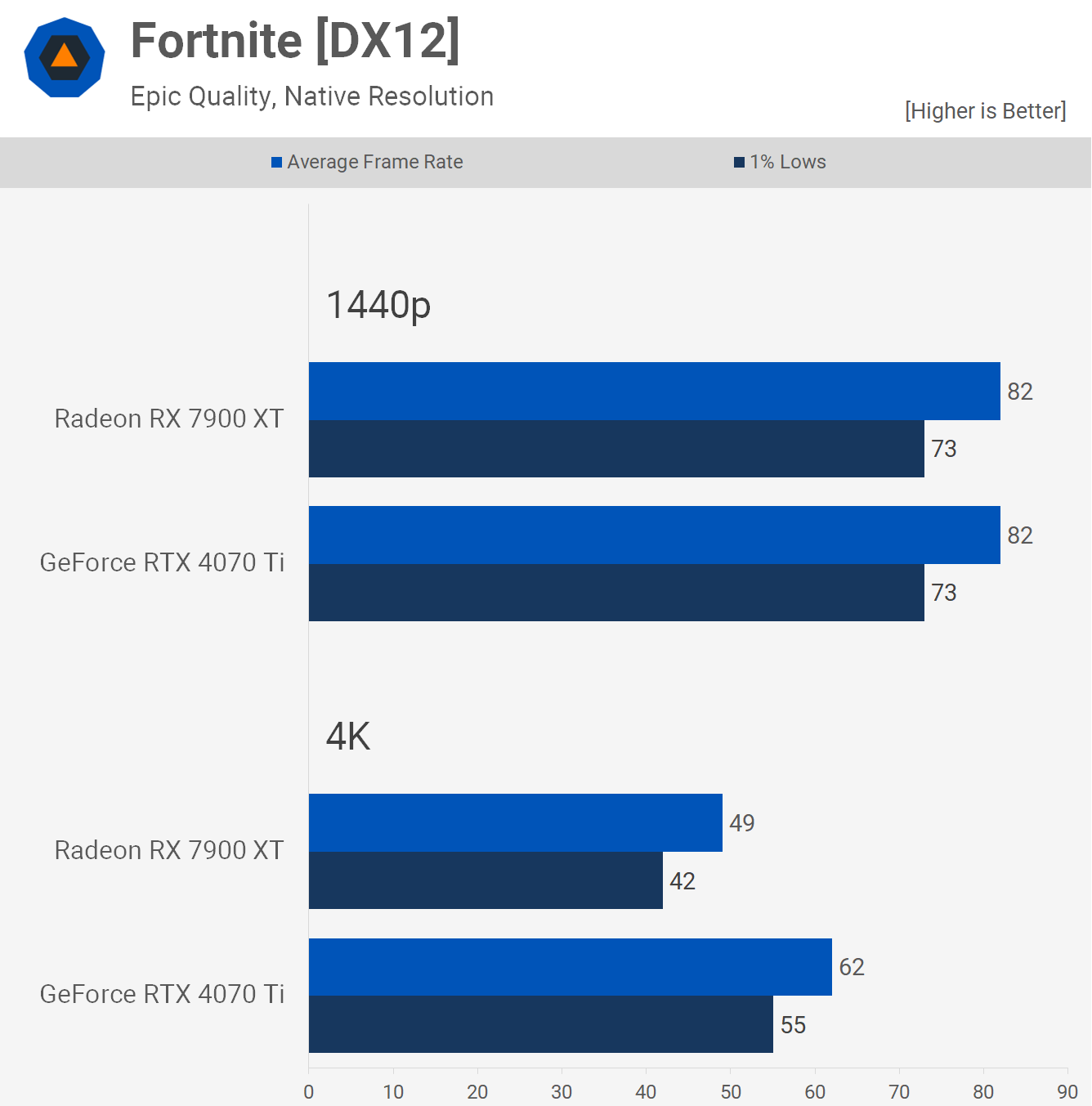

Using the same visual quality settings but moving to DX12, we see a massive decline in performance, especially at 1440p where the RTX 4070 Ti is a little over 30% slower (compared to itself running on DX11).

Some readers have commented in the past that the game runs better using DX12 as opposed to DX11, but that's certainly not the case with the Epic quality settings and a powerful CPU. It's possible that those of you with a slower CPU will find DX12 to be faster, but typically we expect you'll get more frames using DX11.

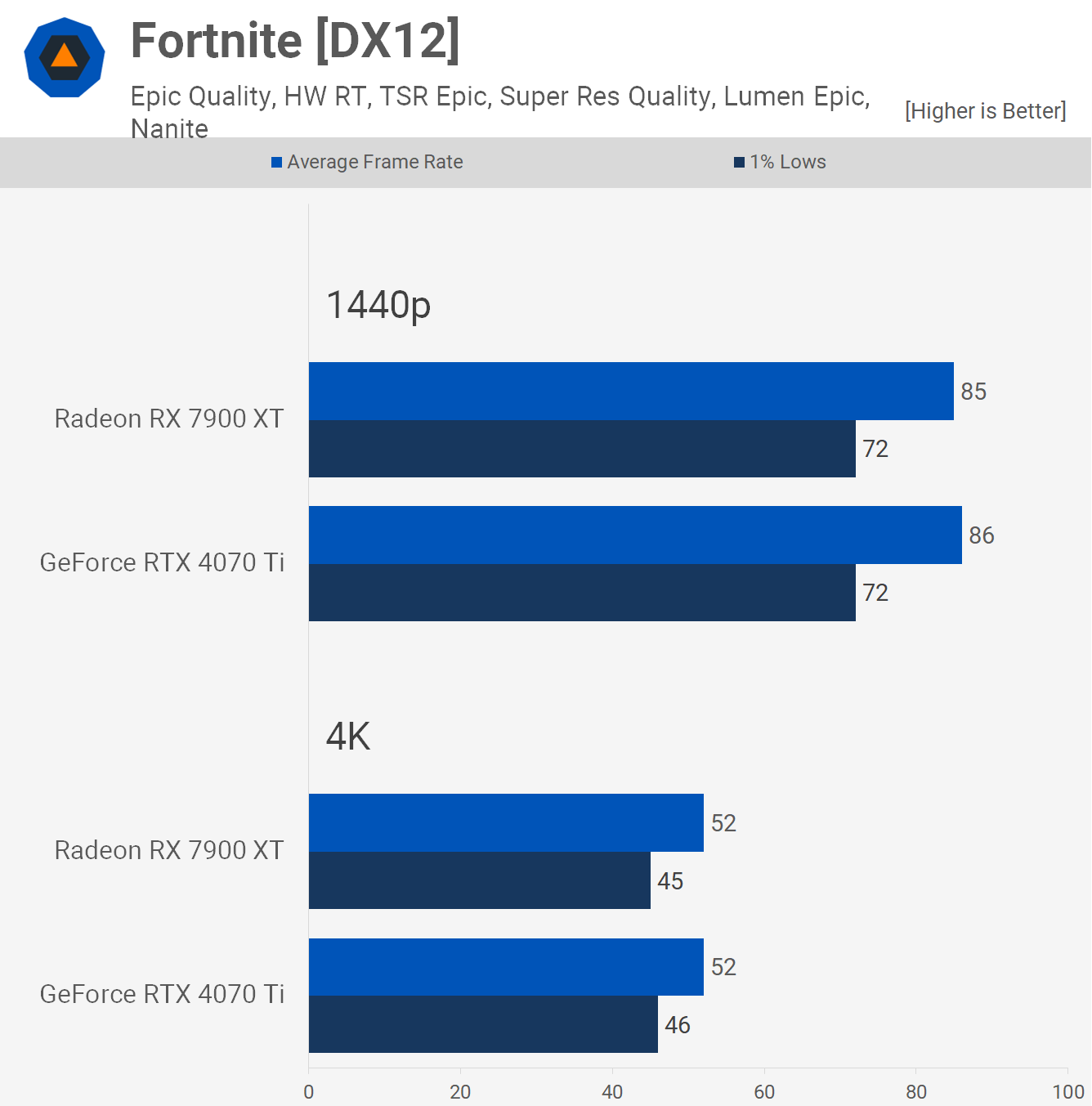

Now with hardware ray tracing enabled (along with Lumen and Nanite), we find that performance is very similar using either GPU.

This means that for one of the most impressive examples of ray tracing we have to date, RDNA 3 is able to match Ada Lovelace. Therefore it's going to be very interesting to see how these two architectures compare in future Unreal Engine 5 titles when using ray tracing.

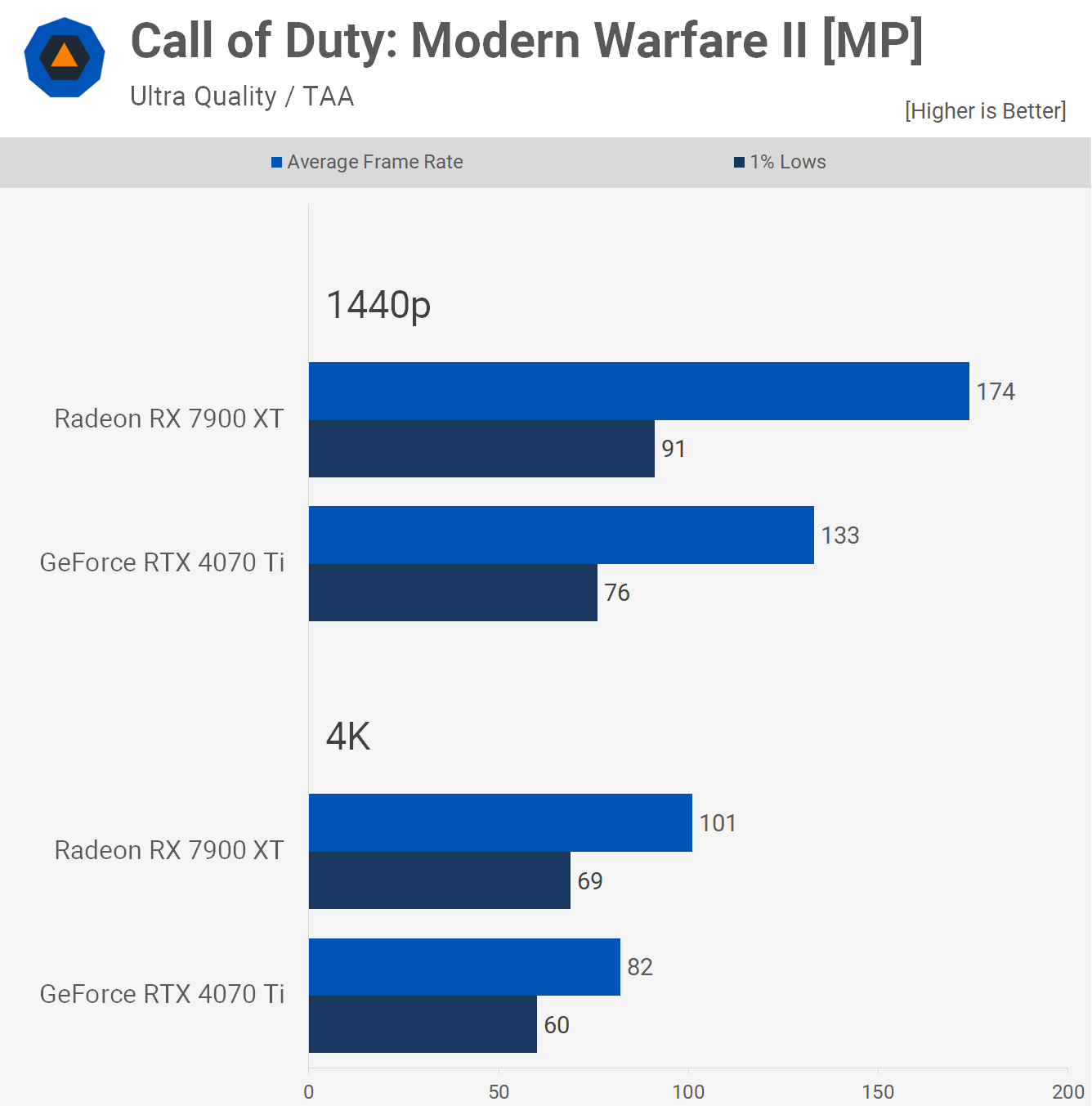

Moving on to Call of Duty: Modern Warfare II, we've dropped the 'Basic' quality preset testing from this comparison, as some people felt testing this title twice with two very different quality presets only gave AMD an advantage, even though that second result accounted for less than 1% of the overall result (there were 62 data points). Either way, scaling is the same, so we might as well just focus on the Ultra data.

Here the Radeon 7900 XT blasted the RTX 4070 Ti by a 31% margin at 1440p, pumping out 174 fps on average. At 4K the Radeon GPU was again faster, this time by 23%, delivering 101 fps to the GeForce's 82 fps.
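Assuming the margins are the usual ratio of average frame rates minus one, the 4K figures above work out as shown in this small sketch. It also shows why the same gap reads differently depending on which card is the baseline: 23% faster one way is roughly 19% slower the other.

```python
def percent_difference(fps_a, fps_b):
    """How much faster (+) or slower (-) GPU A is than GPU B, in percent."""
    return (fps_a / fps_b - 1.0) * 100.0


# Modern Warfare II, 4K, Ultra preset (average fps from this test)
radeon_4k, geforce_4k = 101.0, 82.0

print(f"7900 XT vs 4070 Ti: {percent_difference(radeon_4k, geforce_4k):+.0f}%")  # +23%
print(f"4070 Ti vs 7900 XT: {percent_difference(geforce_4k, radeon_4k):+.0f}%")  # -19%
```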

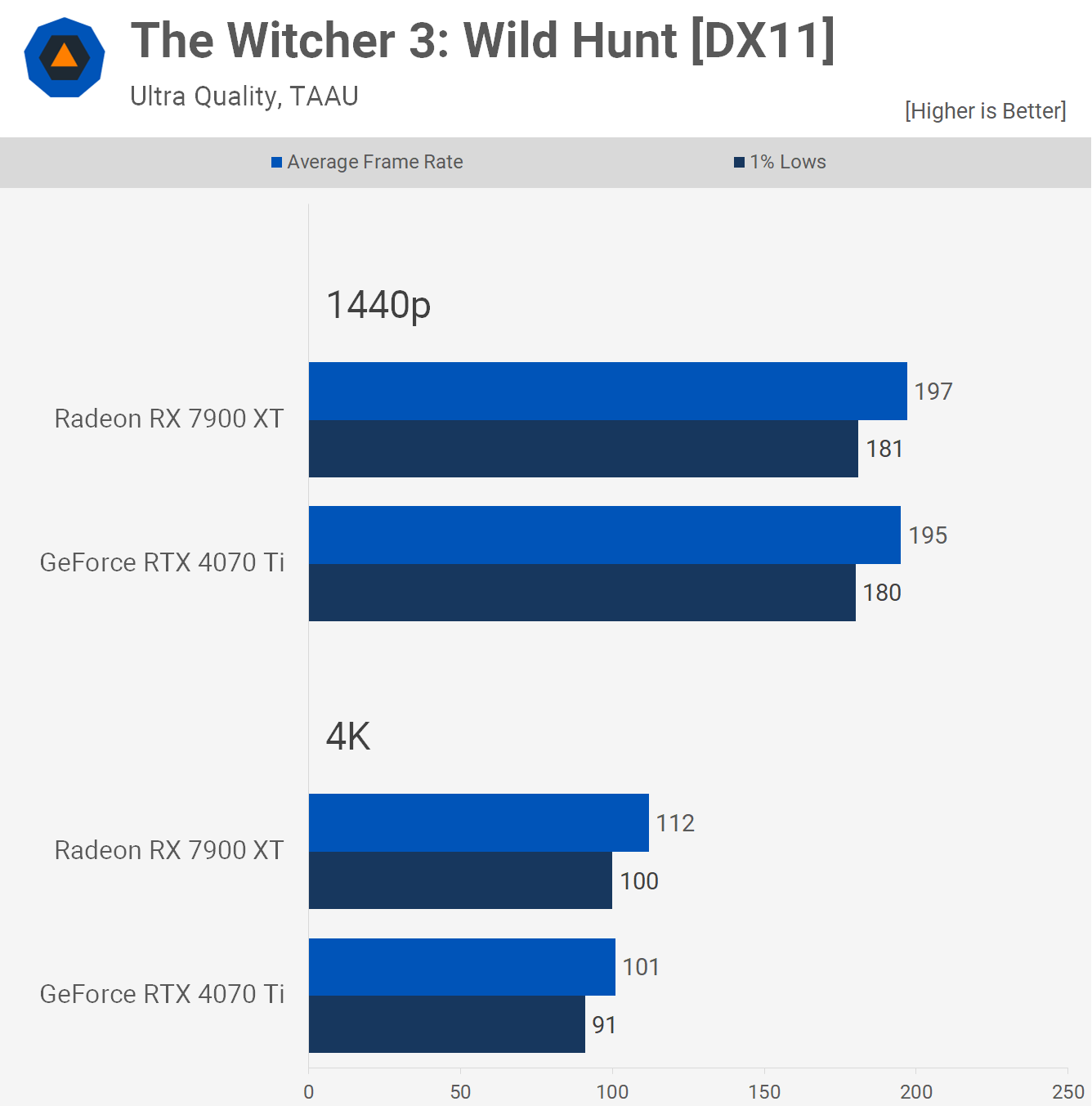

Next up we have The Witcher 3: Wild Hunt using the ultra quality preset, but with the new ray tracing effects disabled. Both GPUs pumped out just shy of 200 fps at 1440p and over 100 fps at 4K, though at 4K the 7900 XT was 11% faster.

Good performance overall as both GPUs deliver a highly enjoyable gaming experience.

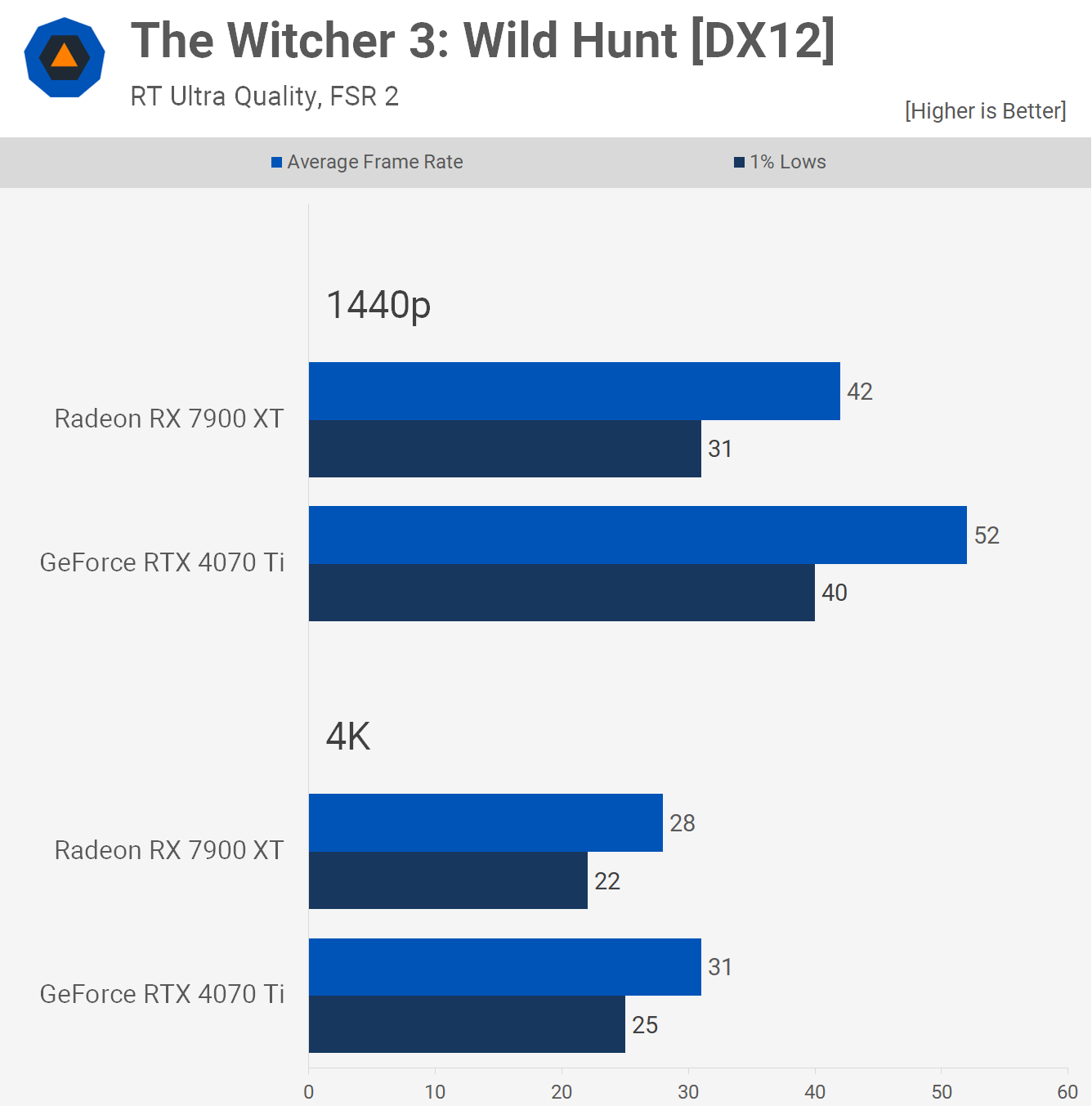

The Witcher 3 again, this time with ray tracing enabled. We've also enabled upscaling, using FSR 2 in Quality mode, as it works on both GPUs and so allows for an apples-to-apples comparison.

Even with the help of upscaling, neither GPU could manage 60 fps at 1440p, meaning performance was around 4x higher with RT disabled. That's a shocking difference, and personally I couldn't justify enabling RT in this title.

If you do want to use ray tracing, the RTX 4070 Ti is the better choice, delivering 24% more performance at 1440p. I think it's safe to ignore the 4K results, unless you're happy with a sad, console-like 30 fps on your premium and very expensive GPU.

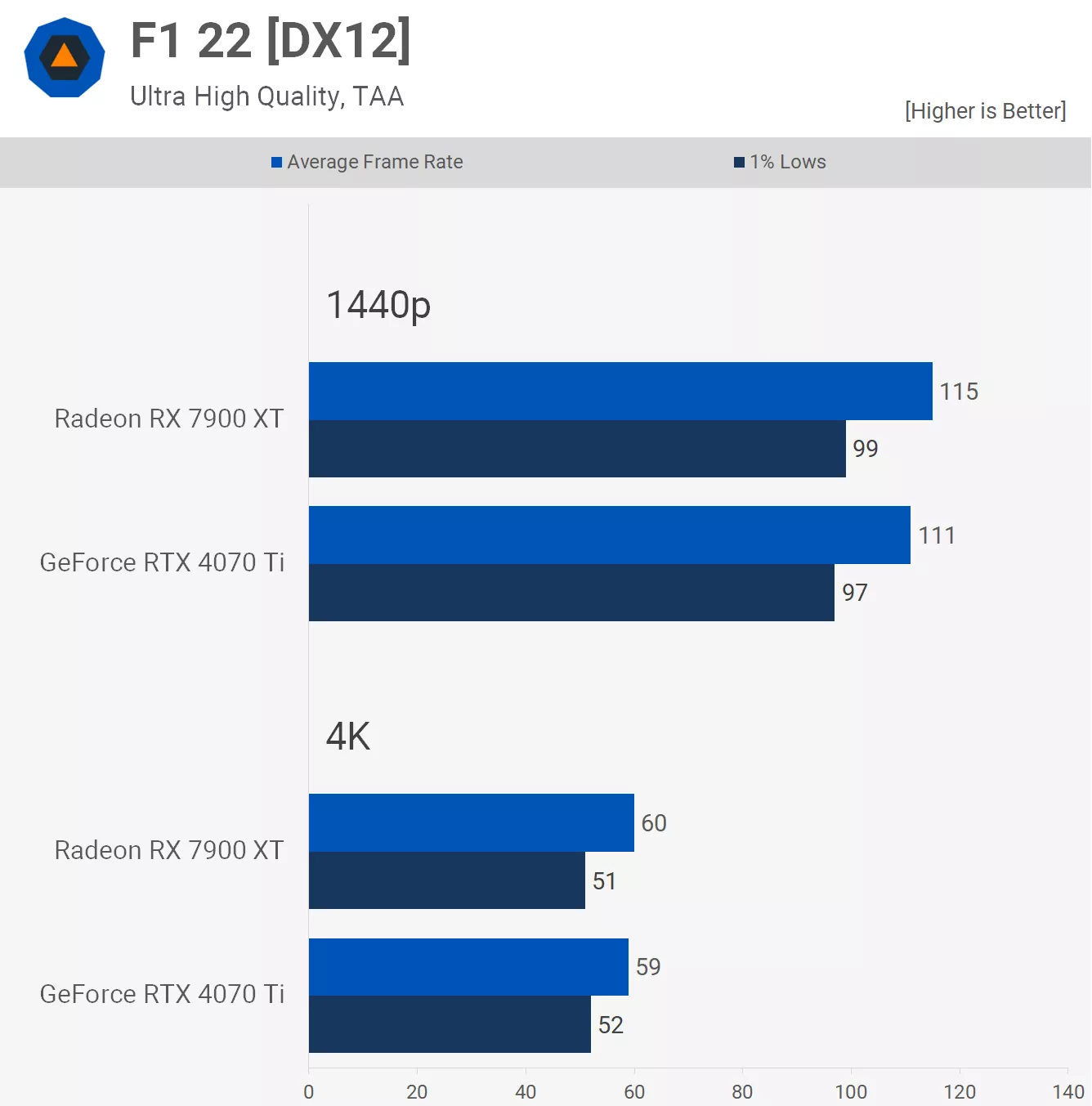

Moving on to F1 22, we tested this game exclusively with ray tracing enabled as that's the default when using the Ultra High preset. For those of you after more frames, your first stop should be to disable RT effects as it does massively boost frame rates for only a minor downgrade in visuals.

In any case, frame rates were decent with over 100 fps at 1440p and 60 fps at 4K, and both GPUs delivered the same level of performance.

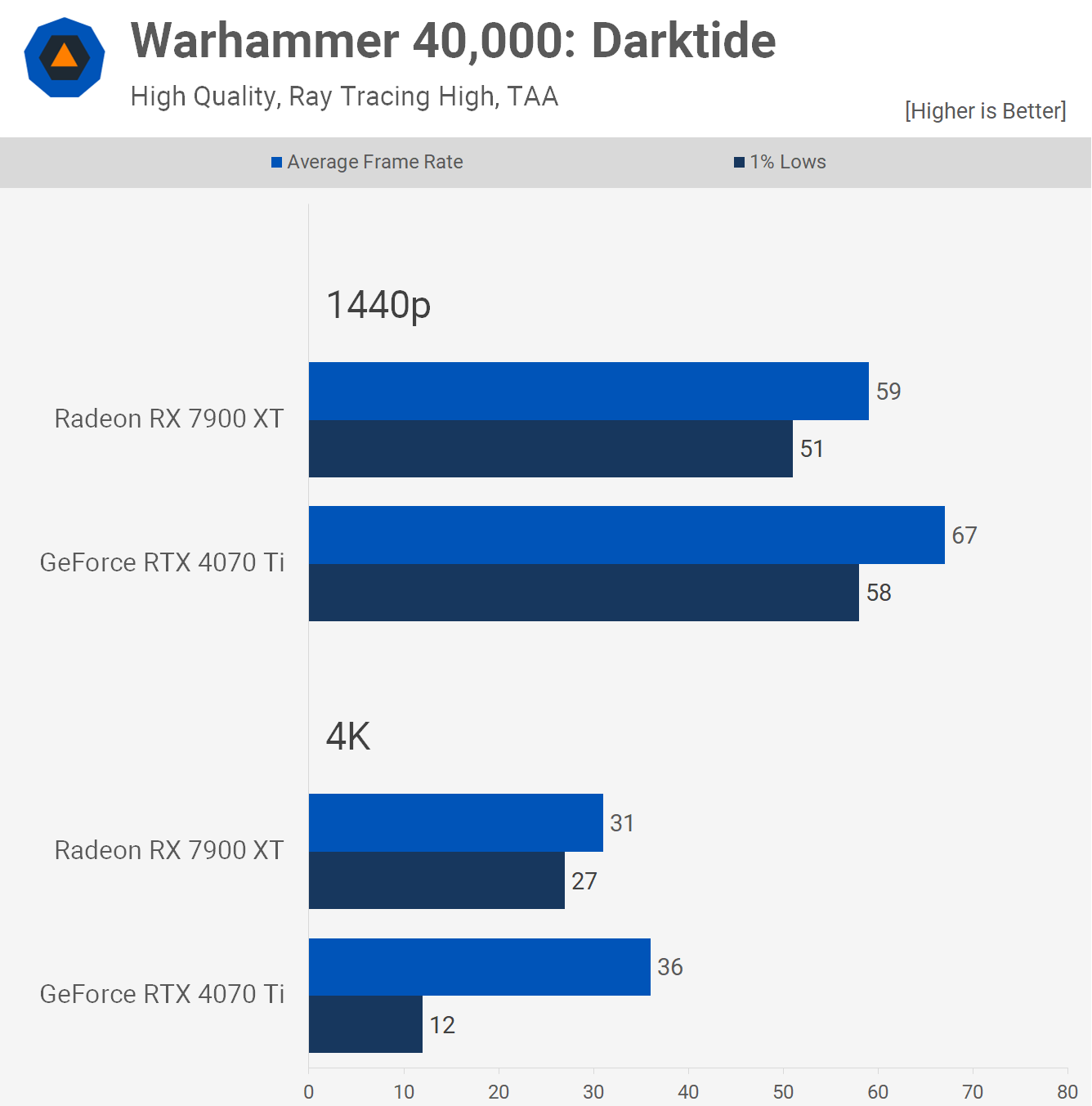

Warhammer 40,000: Darktide was also benchmarked with ray tracing enabled, and this gave the 4070 Ti a 14% performance advantage at 1440p. At 4K, however, its performance appears to break down, as the 1% lows show, because we're exceeding its VRAM buffer.

Still, the Radeon 7900 XT only managed 31 fps, and that's not exactly a win in my book.

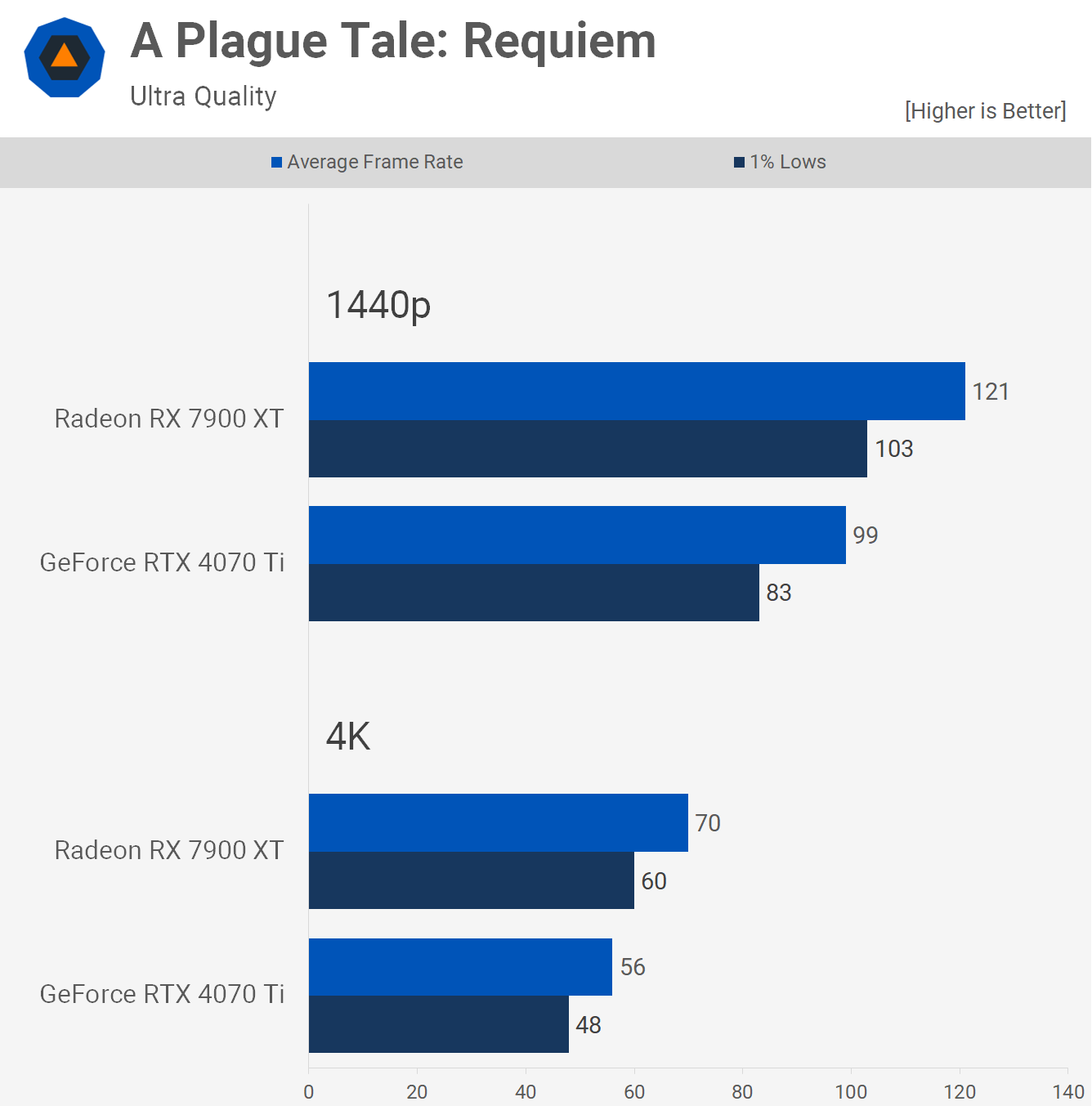

A Plague Tale: Requiem was tested using the ultra quality preset and here the Radeon 7900 XT was much faster, delivering 22% more frames at 1440p and 25% more at 4K. A comfortable win for AMD in this new game.

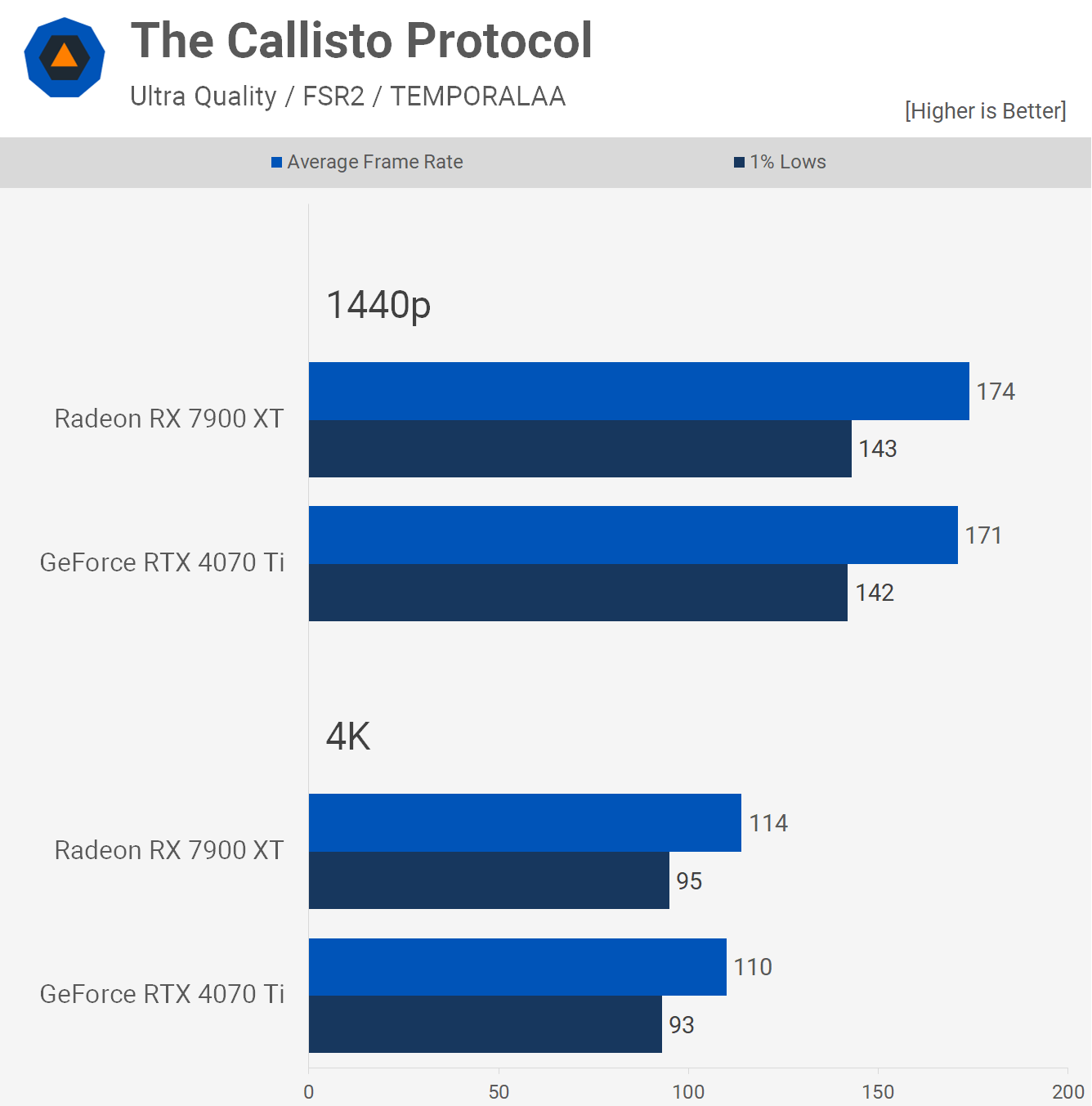

The Callisto Protocol is another new title and this one enables FSR 2 quality by default when using the ultra quality preset. We didn't change the setting and as a result both GPUs were good for over 170 fps at 1440p and over 100 fps at 4K.

The Radeon 7900 XT was a smidgen faster but overall performance was the same across both GPUs.

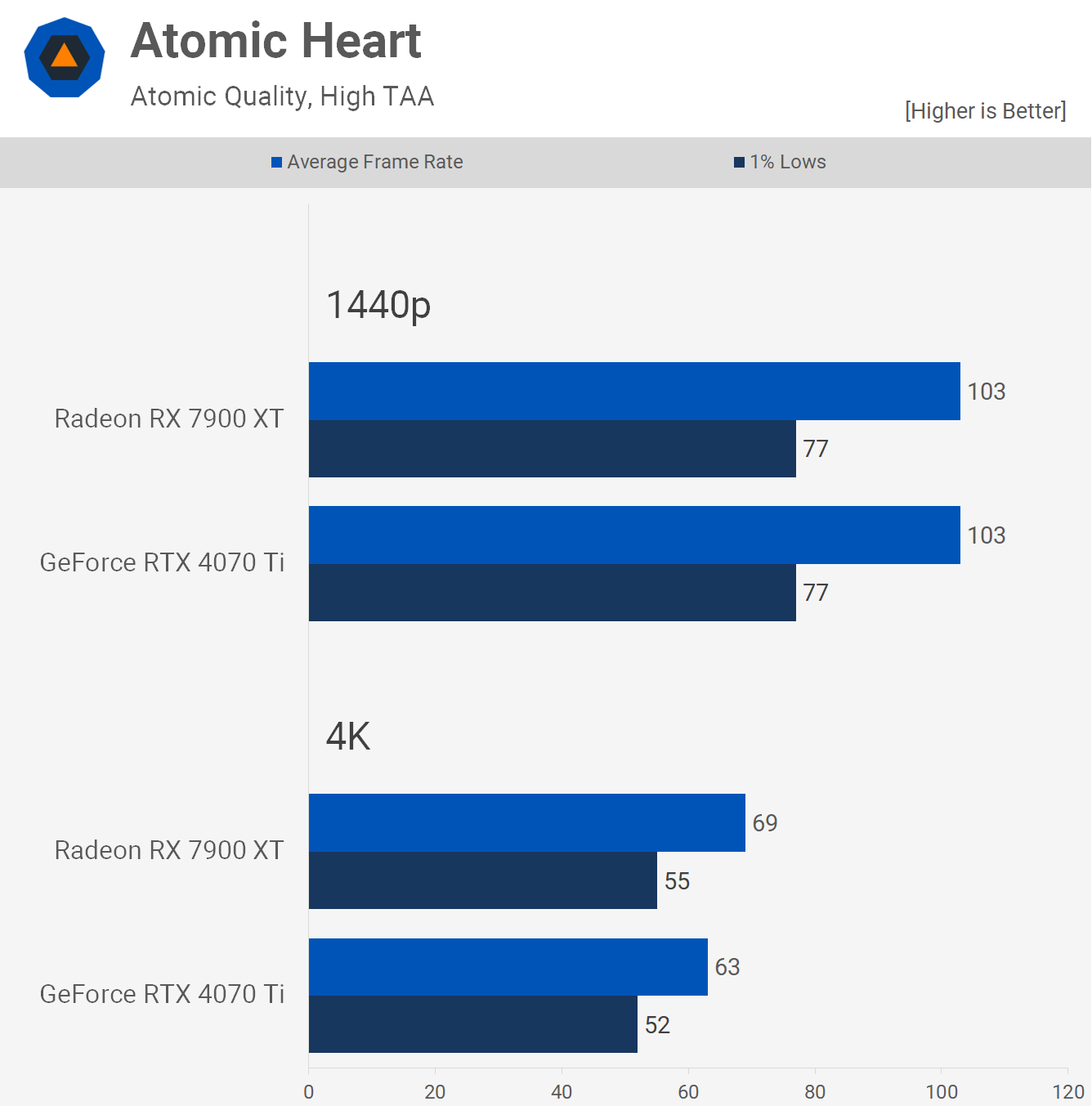

Atomic Heart is the most recently released game we're testing with and it's yet another title built using Unreal Engine 4.

At 1440p both GPUs delivered 103 fps using the maximum visual settings and then just over 60 fps at 4K where the Radeon 7900 XT was 10% faster.

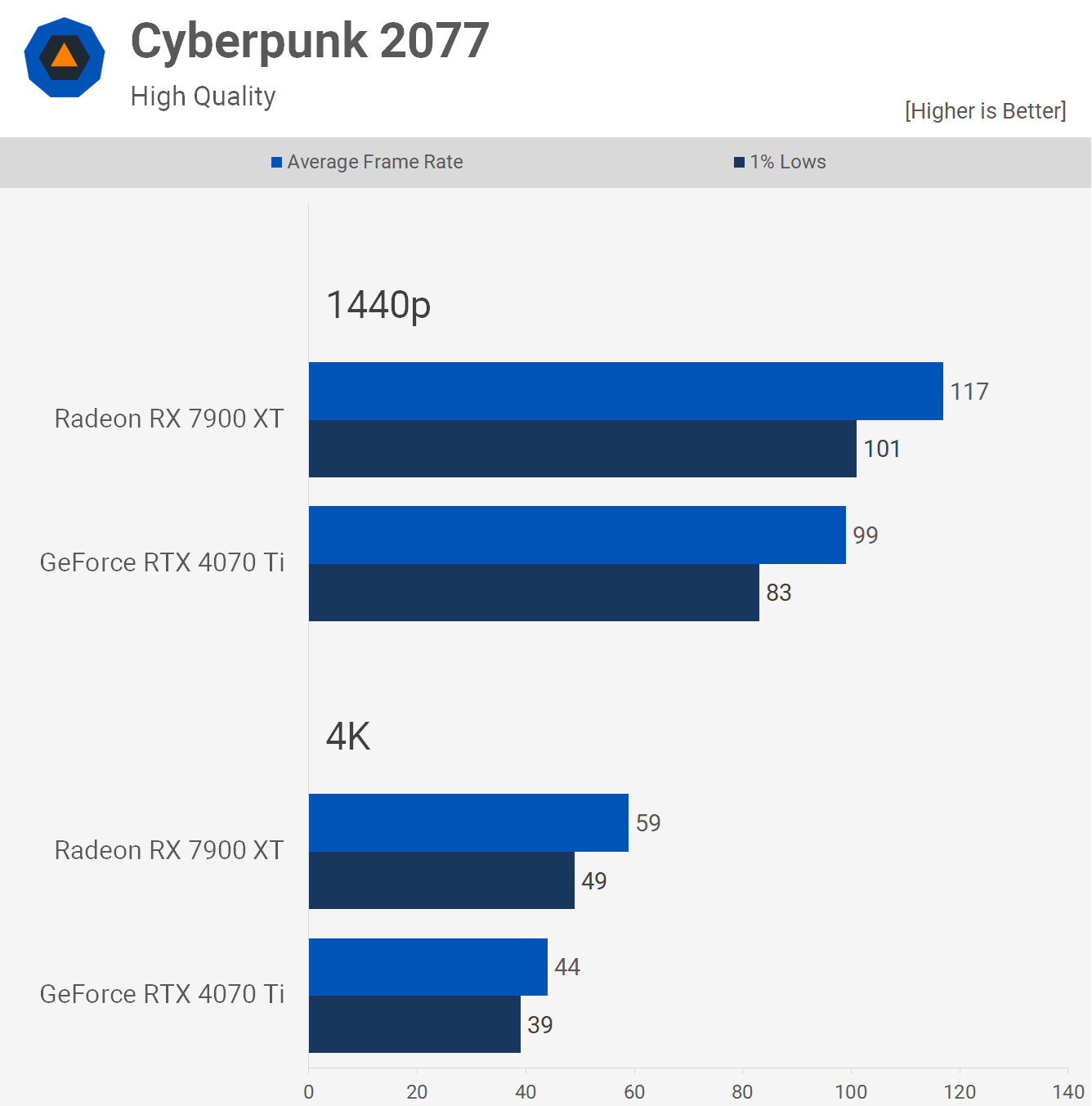

Cyberpunk 2077 was tested using the high quality preset with upscaling manually disabled, though we also have some RT results which we'll look at in a moment.

At 1440p, the Radeon 7900 XT was 18% faster than the 4070 Ti, rendering 117 fps. That margin blows out to 34% at 4K, where the GeForce GPU was good for just 44 fps as opposed to 59 fps for the 7900 XT.

Now with ray tracing enabled, along with FSR 2 Quality for some upscaling, we find dramatically different results. At 1440p, frame rates for the Radeon 7900 XT drop by a staggering 48%, and that's with upscaling enabled.

The GeForce RTX 4070 Ti saw a much more modest 14% decrease in performance and as a result was capable of delivering well over 60 fps at 1440p.

The 4K performance results are even more interesting. The Radeon 7900 XT saw a 44% decrease in frame rates, whereas the GeForce RTX 4070 Ti delivered the same level of performance as it did with ray tracing disabled, and this is possible thanks to upscaling.

So the Radeon went from 34% faster at 4K with ray tracing and upscaling disabled, to 25% slower with both turned on.
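The 4K swing can be cross-checked from the figures quoted above. This sketch treats the 4070 Ti's RT-plus-upscaling result as exactly equal to its 44 fps raster result, which is an assumption since the text only says it delivered "the same level of performance".

```python
def margin(fps_a, fps_b):
    """Percent by which GPU A is faster (+) or slower (-) than GPU B."""
    return (fps_a / fps_b - 1.0) * 100.0


# Cyberpunk 2077 at 4K, figures quoted in this review
radeon_raster = 59.0                      # 7900 XT, RT and upscaling off
geforce_raster = 44.0                     # 4070 Ti, RT and upscaling off
radeon_rt = radeon_raster * (1 - 0.44)    # 44% drop with RT + FSR 2 Quality
geforce_rt = geforce_raster               # assumed unchanged with RT + FSR 2 Quality

print(f"RT off: {margin(radeon_raster, geforce_raster):+.0f}%")  # +34%
print(f"RT on:  {margin(radeon_rt, geforce_rt):+.0f}%")          # -25%
```

The Radeon lands at roughly 33 fps with RT on, which reproduces the swing from 34% faster to 25% slower.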

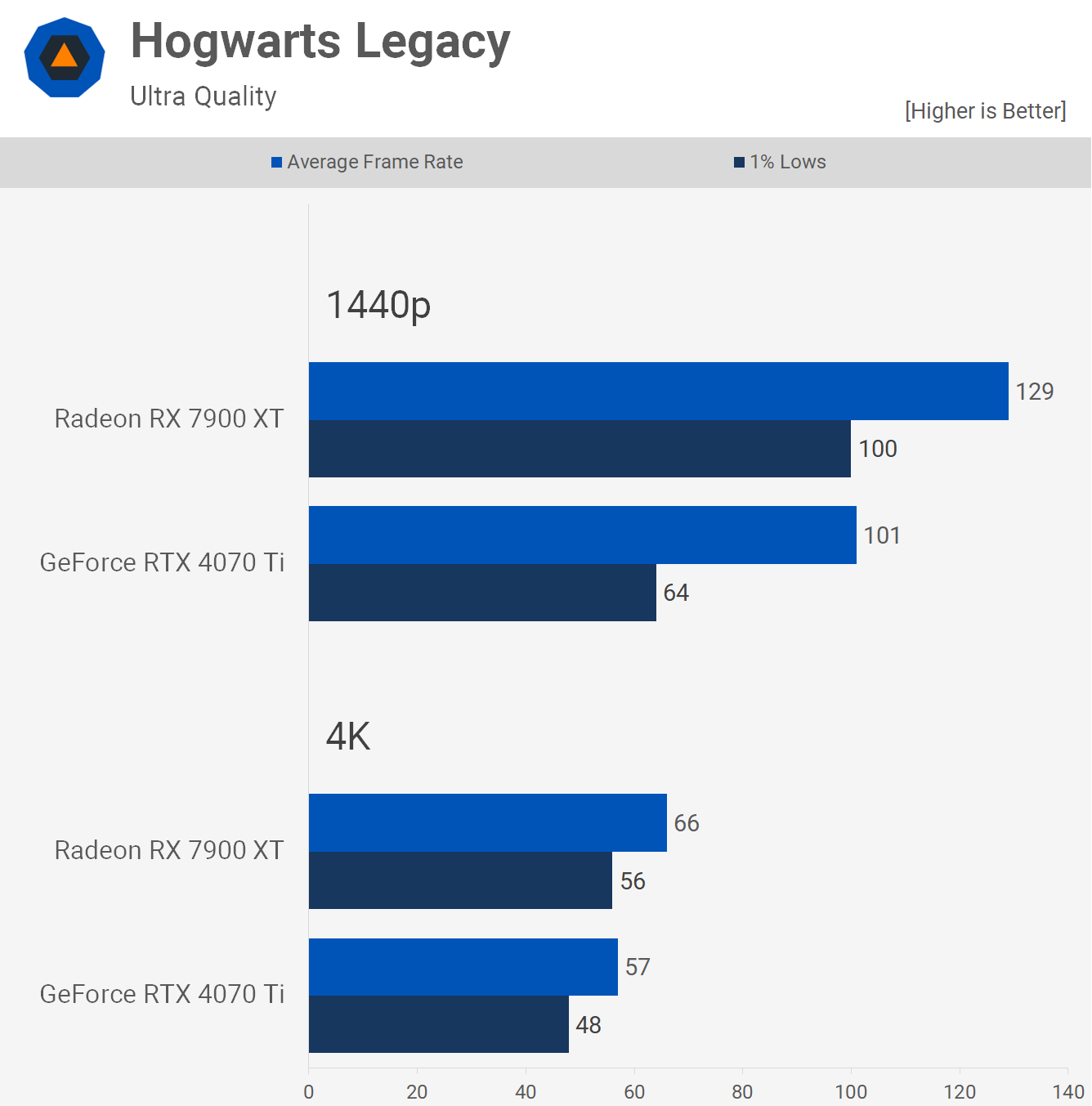

Lastly, we have everyone's favorite video game, Hogwarts Legacy.

The Radeon 7900 XT rips the GeForce RTX 4070 Ti a new one here, delivering 28% more frames at 1440p with a staggering 56% increase for the 1% lows. The margins close up at 4K and now the Radeon 7900 XT is just 16% faster, which admittedly is still a significant margin, but nothing like what was seen at 1440p.

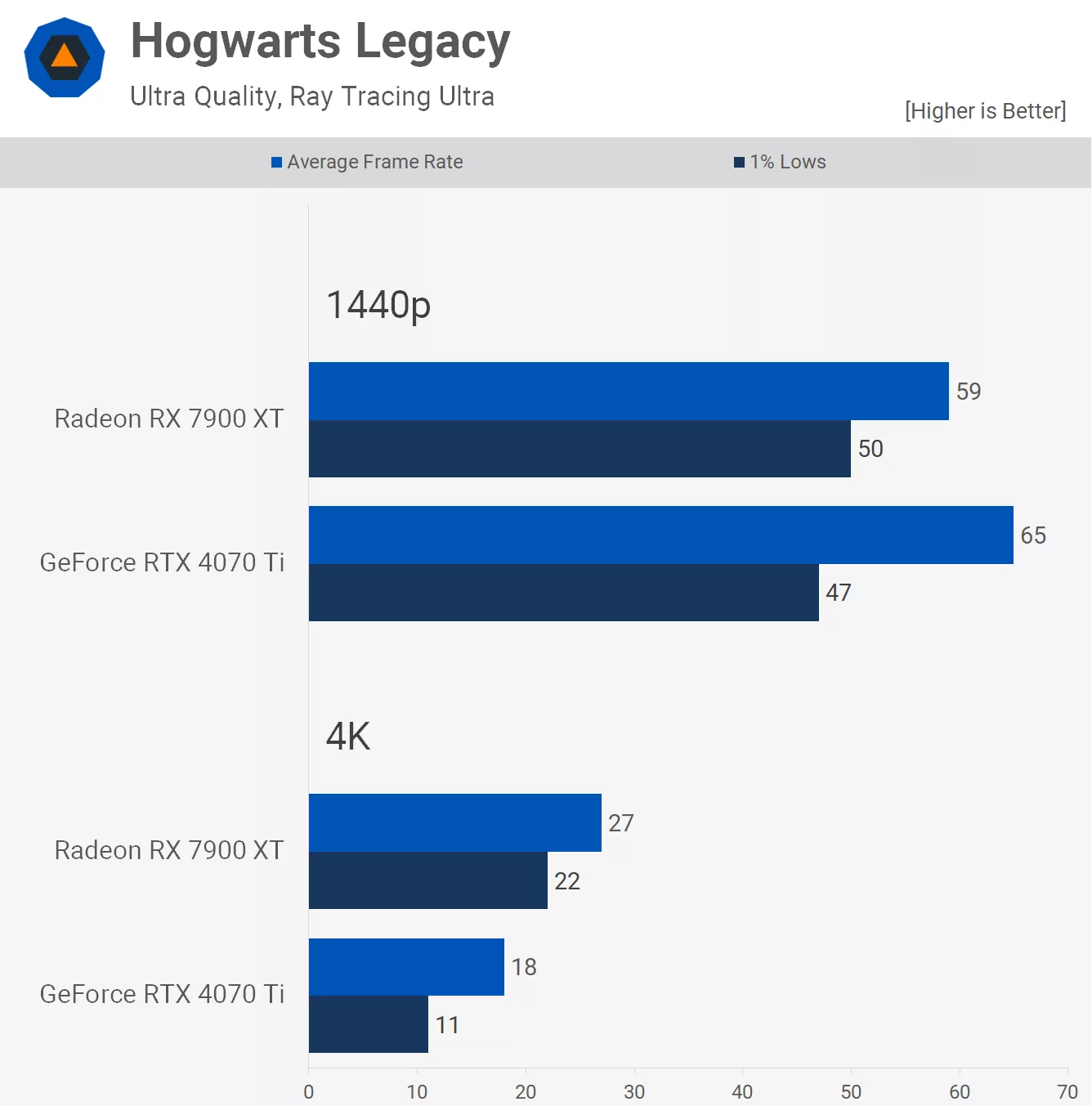

Enabling ray tracing changes the picture quite a bit – not so much in the game's graphics though, depending on where you are – but it certainly changes how our graphs look.

The Radeon 7900 XT no longer dominates the RTX 4070 Ti and in fact at 1440p is now 9% slower with just 59 fps on average. That's a massive 54% reduction in FPS for the 7900 XT when enabling ray tracing and a 36% decrease for the 4070 Ti.

The GeForce RTX 4070 Ti does run into VRAM related performance issues at 4K, and while the Radeon 7900 XT is able to avoid such issues, with just 27 fps on average it was hardly a desirable result.

Performance Summary

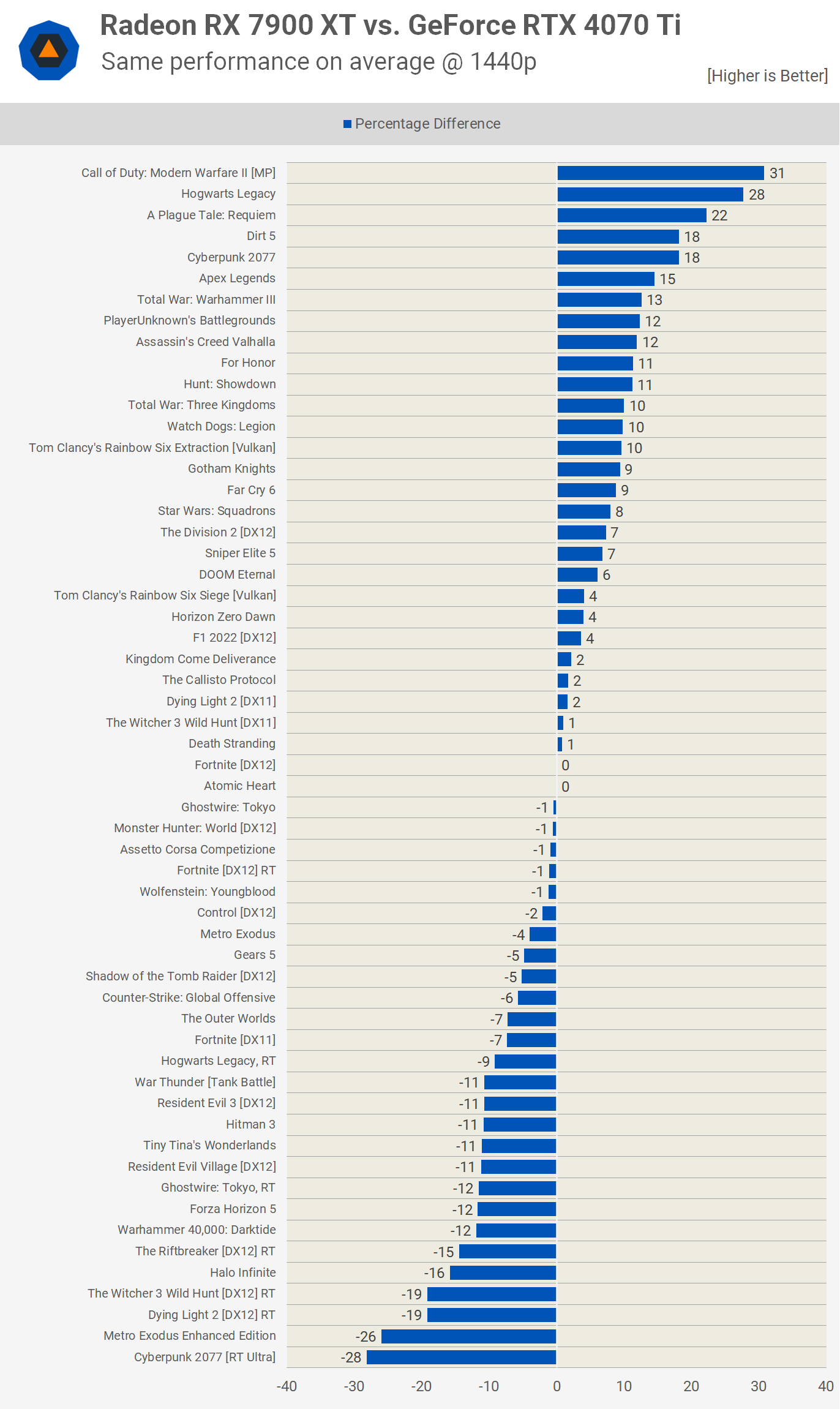

After taking a detailed look at a small sample of the 50 games we tested in total, let's now compare the GeForce RTX 4070 Ti and Radeon RX 7900 XT across all titles we spent the past week benchmarking. Starting with the 1440p data...

Well, that's rather unexciting, isn't it? After a massive round of benchmarking, we've found that the 7900 XT and 4070 Ti are neck and neck at 1440p on average. That shouldn't come as a surprise, though, as the Radeon 7900 XT was just 4% faster on average in our day-one review, where we separated the rasterization and ray tracing results.

With many more ray tracing results included in this comparison, the margins don't change dramatically, so it's fair to say the 7900 XT and 4070 Ti are very similar overall.

Of course, we still see performance swings of around 30% in either direction. Modern Warfare II, Hogwarts Legacy with RT disabled, and A Plague Tale: Requiem are all great titles for the Radeon, whereas Cyberpunk 2077 with RT, Metro Exodus Enhanced Edition (which of course uses RT), Dying Light 2 with RT, and The Witcher 3 with RT all perform better on the GeForce GPU.

While the GeForce RTX 4070 Ti typically does better with RT enabled, that's not always the case. A good example is Fortnite, where performance was basically a match. Ray tracing performance between these two GPUs could therefore become a lot more competitive going forward, especially in games using Unreal Engine 5.

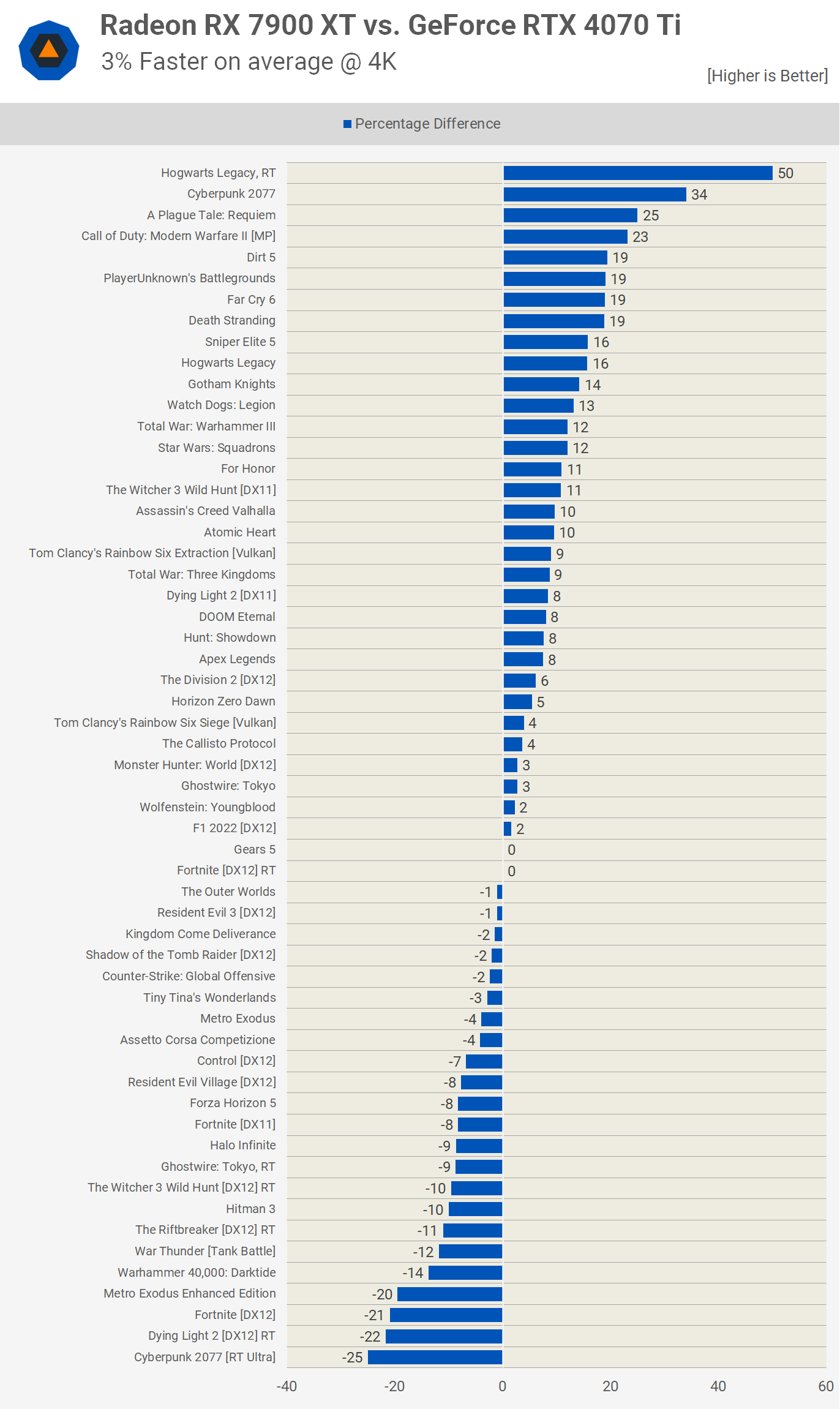

Looking at 4K results now, in our day-one review the Radeon 7900 XT was 8% faster than the GeForce RTX 4070 Ti on average, but here with many more games and a lot more ray tracing results, it's just 3% faster. A technical win for the Radeon, but anything within 5% can be considered a draw in our opinion.
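We don't detail here how the multi-game averages are calculated. A geometric mean of per-game performance ratios is a common choice for this kind of summary, and this sketch assumes it, combined with the "within 5% is a draw" rule stated above. The ratios in the example are made-up placeholders, not our data.

```python
import math


def geometric_mean(ratios):
    """Geometric mean of per-game ratios (GPU A fps / GPU B fps)."""
    return math.exp(sum(math.log(r) for r in ratios) / len(ratios))


def verdict(ratios, draw_threshold=0.05):
    """Average the ratios, then call anything within 5% a draw."""
    avg = geometric_mean(ratios)
    if abs(avg - 1.0) <= draw_threshold:
        return avg, "draw"
    return avg, "A faster" if avg > 1.0 else "B faster"


# Hypothetical per-game ratios: a 31% win, a 20% loss, and a tie
avg, result = verdict([1.31, 0.80, 1.00])
print(f"{avg:.3f} -> {result}")
```

The geometric mean is used because performance ratios multiply: a 2x win and a 2x loss cancel out, and high frame rate titles can't dominate the result the way they would in an arithmetic mean of raw fps.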

What We Learned: A Hard Choice to Make

There you have it, that's the Radeon RX 7900 XT vs. the GeForce RTX 4070 Ti in a massive sample of games. Overall they are very close in terms of performance. The 7900 XT generally does better with ray tracing disabled (Hogwarts Legacy, The Witcher 3, Dying Light 2, and Cyberpunk 2077 are all good examples of that), but there are signs that ray tracing performance in future Unreal Engine 5 titles could be a lot more competitive, as seen in Fortnite.

Still, if you're keen on ray tracing performance then the GeForce RTX 4070 Ti is the more attractive product. You will have to stomach the performance hit, which we know not all of you are willing to do, but in any case, in the vast majority of ray tracing-enabled titles we have today, the RTX 4070 Ti puts on a better show.

There's also the matter of upscaling... DLSS remains king in that area and while it's not always head and shoulders better than FSR, it is an advantage of the GeForce series.


On that note, we're sure some Nvidia fans will be upset that we didn't test with DLSS enabled, but there's a good reason for that: if we had used DLSS on the GeForce, we would have had to use FSR on the Radeon, and that makes it an apples-to-oranges comparison, both in terms of image quality and performance.

It was simply easier and more accurate (for the purpose of a comparison) to test both GPUs with the same upscaling technology. I'm sure that makes sense to most of you.

Power consumption is also very similar between the two. Generally the Radeon uses more power, but it's also faster in most of those examples, so performance per watt is much the same and there's not a big enough difference to matter.

A potentially significant difference is that the Radeon 7900 XT packs 20GB of VRAM compared to just 12GB in the GeForce RTX 4070 Ti. Today we'd consider 12GB the minimum memory spec for gaming, a capacity that's more at home on a mid-range or even entry-level graphics card. For $800 you'd expect 16GB as a minimum, so with almost 70% more VRAM (20GB vs. 12GB is a 67% increase), the Radeon 7900 XT should be far better equipped for future titles.

But as we observed in our testing, 12GB is still enough for the vast majority of today's games, and for all of them at playable settings. Even so, we've seen how poorly high-end 8GB models such as the RTX 3070 Ti have aged, and we don't expect the 4070 Ti to fare much better in that regard.

As for display drivers, we know people like to rag on AMD, and maybe rightfully so in some instances. But lately Nvidia has had just as many driver-related issues, and GeForce Experience is kind of rubbish, not least because you need an account to log in. The interface is very dated and in desperate need of an overhaul, and features such as ShadowPlay are severely lacking compared to Radeon ReLive.

Ultimately, at around $800 to $850 we're none too impressed with either GPU, and the difference in price isn't big enough to make or break the comparison either. On that note, we'll quickly revisit cost per frame, and what this data really highlights is how badly AMD screwed up with the ridiculous $900 launch price of the 7900 XT.

Had they started at $800 our review would have looked very different, and although we still believe this should be a $700 product, $800 is a lot easier to stomach. Not only that, but at $800 the Radeon 7900 XT is better value than both the Radeon RX 6800 and 6800 XT at their MSRP, which is always good to see.

The GeForce RTX 4070 Ti was meant to cost $800, but right now it sells for $850 or more, which places it roughly on par with the RTX 3080 10GB at its MSRP, and that isn't exactly moving the needle forward.
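Cost per frame is simply price divided by average frame rate. We don't reproduce the chart's figures here; as a rough sketch, the same idea is applied to the street prices quoted in this review ($800 and $850) and the summary averages, treating the 4070 Ti as 1.00 and the 7900 XT as 1.00 at 1440p ("neck and neck") and 1.03 at 4K. Using a relative performance index instead of absolute fps is an assumption made for illustration; the ratio between the two cards comes out the same either way, because the absolute scale cancels.

```python
def cost_per_frame(price_usd, relative_fps):
    """Dollars per unit of performance (lower is better)."""
    return price_usd / relative_fps


radeon_price, geforce_price = 800.0, 850.0   # street prices quoted in this review

# Relative performance (7900 XT, 4070 Ti), with the RTX 4070 Ti as 1.00
perf = {"1440p": (1.00, 1.00), "4K": (1.03, 1.00)}

for res, (radeon_perf, geforce_perf) in perf.items():
    ratio = cost_per_frame(radeon_price, radeon_perf) / cost_per_frame(geforce_price, geforce_perf)
    print(f"{res}: 7900 XT costs {100 * (1 - ratio):.0f}% less per frame")
```

On these inputs the Radeon works out roughly 6% cheaper per frame at 1440p and 9% cheaper at 4K.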

When compared to previous generation GPUs, the Radeon 7900 XT is mildly attractive at $800, while the RTX 4070 Ti is a nothingburger at $850. These GPUs are likely going to occupy the same price point over the next year or so, and with similar performance and features, we don't think you can go too wrong with either – there's certainly no alternative at this performance tier.

But with $100 knocked off its launch price, we'd probably go with the Radeon 7900 XT, as we expect that extra VRAM to come in handy down the track. We won't passionately argue for or against either, though; they're both solid options in today's market, so review the data and pick the one that makes the most sense for you.